We recently attended a conference where the “sales for AI” panel lumped agents, AI automations, and plain automations together as if they were one word. We get that these terms get tossed around like synonyms, with MCP thrown into the mix too, but they aren't.

They share the same end goal (getting work done with software), but they take very different approaches. Don't be like those people. Here is how they differ.

What is Automation?

Automation is rule-driven execution: you do X, you get Y. It's a pre-planned workflow that traditionally makes tools play nice with each other. For instance, a new lead comes in, and while team A is moving to Salesforce, you still want that lead in your HubSpot account too. An automation can make both platforms talk to each other. Or as Make puts it, “workflow automation is the process of executing procedural workflows automatically, with little to no human involvement.” (Make, 2024)
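Here is a minimal sketch of that rule-driven idea, assuming a "new lead" trigger has already fired. The `Lead` type, `on_new_lead`, and both field mappings are hypothetical illustrations (loosely modeled on Salesforce Lead fields and HubSpot contact properties), and no real API is called. The point is that the same input always produces the same two payloads.

```python
from dataclasses import dataclass

# Hypothetical lead record; field names are illustrative, not a real CRM schema.
@dataclass(frozen=True)
class Lead:
    email: str
    first_name: str
    last_name: str
    company: str

def to_salesforce(lead: Lead) -> dict:
    # Hypothetical mapping onto Salesforce-style Lead fields.
    return {
        "Email": lead.email,
        "FirstName": lead.first_name,
        "LastName": lead.last_name,
        "Company": lead.company,
    }

def to_hubspot(lead: Lead) -> dict:
    # Hypothetical mapping onto a HubSpot-style contact "properties" payload.
    return {
        "properties": {
            "email": lead.email,
            "firstname": lead.first_name,
            "lastname": lead.last_name,
            "company": lead.company,
        }
    }

def on_new_lead(lead: Lead) -> list[tuple[str, dict]]:
    """Rule: every new lead goes to both CRMs. Same input, same output, every time."""
    return [("salesforce", to_salesforce(lead)), ("hubspot", to_hubspot(lead))]

lead = Lead("ada@example.com", "Ada", "Lovelace", "Analytical Co")
jobs = on_new_lead(lead)
```

There is no judgment anywhere in this path; a real automation platform would add the trigger, retries, and the actual API calls around it.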

Where AI Automation comes in

When you place an LLM inside a workflow, the workflow stays deterministic while one step becomes probabilistic.

What changes with an LLM in the loop.

You can use the model to do stuff like:

- Classify: route emails by topic, triage tickets by severity.

- Extract: pull entities from text into fields; summarize long notes to a brief.

- Generate: draft a reply, produce a first-pass description, write a regex given examples.
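To make the "deterministic workflow, one probabilistic step" idea concrete, here is a sketch of email routing. `fake_llm` is a hypothetical stand-in for a real model call so the example runs offline; the surrounding steps (normalizing input, validating the label, falling back to a manual-review queue) are ordinary deterministic code.

```python
from typing import Callable

ALLOWED_TOPICS = {"billing", "support", "sales"}

def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a model call. A real LLM could return any string.
    text = prompt.lower()
    if "invoice" in text or "refund" in text:
        return "billing"
    if "error" in text or "broken" in text:
        return "support"
    return "sales"

def route_email(body: str, classify: Callable[[str], str] = fake_llm) -> str:
    # Step 1 (deterministic): normalize whitespace.
    cleaned = " ".join(body.split())
    # Step 2 (probabilistic): ask the model for a label.
    label = classify(f"Classify this email as billing, support, or sales:\n{cleaned}")
    label = label.strip().lower()
    # Step 3 (deterministic): validate; anything off-list goes to a fixed fallback queue.
    if label not in ALLOWED_TOPICS:
        return "queue:manual-review"
    return f"queue:{label}"
```

Swapping `fake_llm` for a real client changes only step 2; the validation in step 3 is what keeps the rest of the workflow predictable.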

Google frames it well:

“The beauty of workflows is that it not only helps you to call and orchestrate generative AI but it also helps you to connect generative AI.” (Google Cloud, n.d.)

When outputs must be structured, lean on techniques like structured generation to make formats predictable. For example, ask the model to return JSON that fills specific fields; later steps in your flow can then reference those fields directly.
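A sketch of the receiving side, assuming you have prompted the model to return a JSON object with `customer`, `severity`, and `summary` fields (a hypothetical schema; the 1 to 4 severity range is also an assumption). The parser rejects anything that doesn't match before a later step references the fields.

```python
import json
from dataclasses import dataclass

@dataclass
class Ticket:
    customer: str
    severity: int
    summary: str

# Hypothetical schema the prompt asks the model to follow.
REQUIRED = {"customer": str, "severity": int, "summary": str}

def parse_ticket(model_output: str) -> Ticket:
    data = json.loads(model_output)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for name, expected in REQUIRED.items():
        value = data.get(name)
        # bool is a subclass of int in Python, so reject it explicitly.
        if not isinstance(value, expected) or isinstance(value, bool):
            raise ValueError(f"field {name!r} missing or not {expected.__name__}")
    if not 1 <= data["severity"] <= 4:
        raise ValueError("severity out of range")
    return Ticket(data["customer"], data["severity"], data["summary"])

# A later step can reference the fields directly once parsing succeeds.
ticket = parse_ticket('{"customer": "Acme", "severity": 2, "summary": "Login fails"}')
next_step = f"Open a P{ticket.severity} ticket for {ticket.customer}: {ticket.summary}"
```

If parsing fails, the workflow can retry the model call or route to a fallback instead of passing malformed data downstream.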

When to switch back to rules.

If the cost of error is high, inputs are wildly ambiguous, or you’re in a cold start with no examples, keep the LLM scoped or gated. Use confidence thresholds, human approvals, and fallbacks to template-based rules.
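One way to express that gating, as a sketch. It assumes your classifier reports a 0-to-1 `confidence` score (raw LLM output doesn't come calibrated, so treat that score as an assumption), and the keyword `RULES` and the `decide` helper are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class ModelResult:
    label: str
    confidence: float  # assumed 0..1 score from your model or classifier

# Hypothetical keyword rules used as the deterministic fallback.
RULES = {"refund": "billing", "password": "account"}

def rule_label(text: str) -> Optional[str]:
    lowered = text.lower()
    for keyword, label in RULES.items():
        if keyword in lowered:
            return label
    return None

def decide(text: str, result: ModelResult, threshold: float = 0.8,
           high_stakes: bool = False) -> tuple[str, str]:
    """Return (label_or_action, source)."""
    if high_stakes:
        return "needs_human_approval", "human"  # gate: a person signs off
    if result.confidence >= threshold:
        return result.label, "model"            # confident enough to trust
    fallback = rule_label(text)
    if fallback is not None:
        return fallback, "rule"                 # fall back to a template rule
    return "needs_human_approval", "human"      # nothing reliable: escalate
```

The order matters: stakes are checked before confidence, so even a very confident model can't skip approval on a high-stakes item.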

Where Agents started being popular

The jump from “chat assistant” to “tool-using planner” happened fast.

Between 2023 and 2025, a wave of releases taught assistants to call tools, remember context, and chain steps. Teams leaned in because multistep tasks needed judgment and brittle rules were piling up. Integration fatigue didn’t help: every new app meant bespoke glue.

The hype muddied the language. People called everything an “agent.” Most were enhanced assistants: one tool call, with no planning or memory. As Microsoft frames it, agents “can work alongside or for you, handling tasks with tailored expertise”, which implies planning, tool choice, and some autonomy. (Microsoft WorkLab, 2024) Agents can trigger automations to interact with different tools, but an agent is more than the automations it calls.

What are agents, actually

An agent is a goal-seeking system that can plan, choose tools, and adapt based on feedback to move a task forward over multiple steps.

Every useful agent carries five essentials. First, a planner that decomposes goals into steps and adjusts when results miss. Second, memory: short-term to hold working context, long-term to avoid relearning. Third, a tool catalog with clear contracts, cost/latency notes, and limits. Fourth, policies and constraints that define what’s allowed, where, and with whose approval. Fifth, an evaluator that checks intermediate and final outputs, triggers retries, or escalates to a human.
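The five essentials can be sketched as a toy loop. Everything here is hypothetical: `plan_next` is a rule-based stand-in for an LLM planner, the tools return canned data, memory is a plain list and dict, and policy is a single `needs_approval` flag. It shows how planner, memory, tool catalog, policy, and evaluator fit together, not how any particular agent framework does it.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

@dataclass
class Tool:
    name: str
    run: Callable[[dict], dict]
    cost: float                   # catalog metadata; this sketch records it but sets no budget
    needs_approval: bool = False  # policy hint: irreversible actions need a human yes

@dataclass
class Memory:
    working: list = field(default_factory=list)    # short-term: steps taken in this run
    long_term: dict = field(default_factory=dict)  # long-term slot; a real system would persist it

# Hypothetical tools returning canned data instead of calling real APIs.
def lookup_invoice(args: dict) -> dict:
    return {"invoice": args["id"], "status": "unpaid", "amount": 120}

def draft_reminder(args: dict) -> dict:
    return {"draft": f"Invoice {args['invoice']} for ${args['amount']} is still unpaid."}

def send_email(args: dict) -> dict:
    return {"sent": True, "body": args["draft"]}

CATALOG = {
    "lookup_invoice": Tool("lookup_invoice", lookup_invoice, cost=0.01),
    "draft_reminder": Tool("draft_reminder", draft_reminder, cost=0.02),
    "send_email": Tool("send_email", send_email, cost=0.0, needs_approval=True),
}

def last_ok_output(memory: Memory, tool: str) -> dict:
    return next(s["output"] for s in reversed(memory.working) if s["tool"] == tool and s["ok"])

def plan_next(goal: dict, memory: Memory) -> Optional[tuple]:
    """Planner stand-in: a real agent would ask an LLM; this one reads memory to pick the next step."""
    done = {s["tool"] for s in memory.working if s["ok"]}
    if "lookup_invoice" not in done:
        return "lookup_invoice", {"id": goal["invoice_id"]}
    if "draft_reminder" not in done:
        return "draft_reminder", last_ok_output(memory, "lookup_invoice")
    if "send_email" not in done:
        return "send_email", last_ok_output(memory, "draft_reminder")
    return None  # goal reached

def evaluate(tool: str, output: dict) -> bool:
    """Evaluator: cheap checks on each output; a failed check makes the planner retry that step."""
    if tool == "lookup_invoice":
        return output.get("status") in {"paid", "unpaid"}
    if tool == "draft_reminder":
        return bool(output.get("draft"))
    return output.get("sent") is True

def run_agent(goal: dict, approved: frozenset = frozenset(), max_steps: int = 6) -> Memory:
    memory = Memory()
    for _ in range(max_steps):  # step cap stops runaway retries
        step = plan_next(goal, memory)
        if step is None:
            break
        name, args = step
        tool = CATALOG[name]
        if tool.needs_approval and name not in approved:  # policy check
            memory.working.append({"tool": name, "ok": False, "output": {"escalated": True}})
            break  # escalate to a human instead of acting
        output = tool.run(args)
        memory.working.append({"tool": name, "ok": evaluate(name, output), "output": output})
    memory.long_term["last_goal"] = goal
    return memory

held = run_agent({"invoice_id": "INV-7"})  # stops before sending: no approval
sent = run_agent({"invoice_id": "INV-7"}, approved=frozenset({"send_email"}))
```

Without approval the run stops at the email step and escalates; with approval it finishes. A real planner would be far less predictable, which is exactly why the policy and evaluator sit outside it.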

Levels of autonomy.

You can run agents on rails, pausing at a checkpoint after each step for review: the safest option, but the slowest. A mixed-initiative mode adds human-in-the-loop approvals only at risky moments, which is usually the right default. Fully autonomous runs are rare and tightly bounded with sandboxes, budgets, and timeouts.
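The three modes differ mainly in when a run pauses for a human. A minimal sketch, assuming each planned step has already been flagged as risky or not (how you flag risk is up to you; `Mode`, `needs_checkpoint`, and the sample plan are hypothetical):

```python
from enum import Enum

class Mode(Enum):
    ON_RAILS = "on_rails"        # checkpoint after every step
    MIXED = "mixed_initiative"   # checkpoint only on risky steps
    AUTONOMOUS = "autonomous"    # no checkpoints; bounds (sandbox, budget, timeout) live elsewhere

def needs_checkpoint(mode: Mode, step_is_risky: bool) -> bool:
    if mode is Mode.ON_RAILS:
        return True
    if mode is Mode.MIXED:
        return step_is_risky
    return False

def count_pauses(mode: Mode, risk_flags: list) -> int:
    # True counts as 1 when summed, so this is the number of human reviews per run.
    return sum(needs_checkpoint(mode, risky) for risky in risk_flags)

# Hypothetical four-step plan where only step 3 touches production data.
plan = [False, False, True, False]
```

On this plan, on-rails pauses four times, mixed-initiative once, and autonomous never, which is the speed-versus-safety tradeoff in one line each.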

Fit and limits.

Agents shine at exploration, orchestration, and decision support. The tradeoffs are latency and variance. You manage both with guardrails, evaluation, and crisp scopes. Governance is the tax you pay so the system stays useful.

| Capability | What it enables | Risk | Mitigation |
|---|---|---|---|
| Tool use | Real work beyond text | Irreversible actions | Sandboxes, dry-runs, budgets |
| Planning | Multi-step progress | Loops, detours | Step caps, convergence checks |
| Memory | Personalization, recall | Privacy, drift | Data scopes, TTLs, redaction |
| Generation | Drafts, synthesis | Hallucination | Structured outputs, evals |
| Autonomy | Speed without pings | Off-policy behavior | Guardrails, approvals, logs |
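Several mitigations in the table (step caps, convergence checks, budgets, timeouts, dry-runs) come down to ordinary bookkeeping. A sketch, assuming one `charge` call per agent step and a caller-supplied string summarizing the current state; `RunGuard`, `BudgetExceeded`, and `perform` are hypothetical names, and the cost unit is whatever you choose to track. Seeing an identical state twice is treated as a loop, a blunt but cheap convergence check.

```python
import time
from dataclasses import dataclass, field

class BudgetExceeded(RuntimeError):
    pass

@dataclass
class RunGuard:
    max_steps: int = 10
    max_cost: float = 1.00   # assumed unit, e.g. dollars of model/tool spend
    timeout_s: float = 60.0
    steps: int = 0
    cost: float = 0.0
    started: float = field(default_factory=time.monotonic)
    seen_states: set = field(default_factory=set)

    def charge(self, cost: float, state: str) -> None:
        """Call once per step; raises BudgetExceeded if any bound is crossed."""
        self.steps += 1
        self.cost += cost
        if self.steps > self.max_steps:
            raise BudgetExceeded("step cap reached")
        if self.cost > self.max_cost:
            raise BudgetExceeded("cost budget exceeded")
        if time.monotonic() - self.started > self.timeout_s:
            raise BudgetExceeded("timeout")
        # Convergence check: revisiting an identical state suggests a loop.
        if state in self.seen_states:
            raise BudgetExceeded(f"loop detected at state {state!r}")
        self.seen_states.add(state)

def perform(action: str, dry_run: bool = True) -> str:
    # Dry-run: describe an irreversible action instead of doing it until someone flips the flag.
    if dry_run:
        return f"DRY RUN: would {action}"
    return f"DONE: {action}"  # placeholder for the real side effect
```

Raising an exception keeps the guard independent of the planner: whatever the model decides, the loop that calls `charge` stops when a bound is hit.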

What about MCP?

The Model Context Protocol (MCP) is an open standard that exposes tools, resources, and prompts to AI clients (assistants or agents). Servers offer capabilities; clients discover and invoke them over a consistent contract. (Model Context Protocol, 2024)

On the server side, an MCP server registers tools and resources with clear schemas, authentication, and rate limits. On the client side, assistants or agents connect to those servers, request capabilities, invoke tools, and stream results back into their run. The result is a clean separation of concerns: servers declare what they can do; clients decide when and how to use it.
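MCP messages are JSON-RPC 2.0; tools are discovered with `tools/list` and invoked with `tools/call`. The sketch below is a toy, in-process imitation of those message shapes, not the official MCP SDK: it skips the initialization handshake, transports, and auth, and the `get_invoice` tool and `handle` function are hypothetical. Note that the server only declares and executes; the client code decides what to call.

```python
import json

# Toy server-side registry: each tool declares a name, description, and JSON Schema input.
TOOLS = {
    "get_invoice": {
        "description": "Look up an invoice by id (hypothetical tool).",
        "inputSchema": {
            "type": "object",
            "properties": {"id": {"type": "string"}},
            "required": ["id"],
        },
        "handler": lambda args: f"Invoice {args['id']}: unpaid, $120",
    }
}

def error(rid, code: int, message: str) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": rid, "error": {"code": code, "message": message}})

def handle(request_json: str) -> str:
    """Server side: declares tools and executes calls; it never decides when to call."""
    req = json.loads(request_json)
    method, rid = req["method"], req["id"]
    if method == "tools/list":
        result = {"tools": [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]}
    elif method == "tools/call":
        tool = TOOLS.get(req["params"]["name"])
        if tool is None:
            return error(rid, -32602, "Unknown tool")
        text = tool["handler"](req["params"].get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return error(rid, -32601, "Method not found")
    return json.dumps({"jsonrpc": "2.0", "id": rid, "result": result})

# Client side: discover what exists, then decide to invoke a tool.
listing = json.loads(handle(json.dumps({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})))
call = json.loads(handle(json.dumps({
    "jsonrpc": "2.0", "id": 2, "method": "tools/call",
    "params": {"name": "get_invoice", "arguments": {"id": "INV-7"}},
})))
```

Because the contract is just these messages, any MCP-capable assistant or agent could discover and call the same tool without a bespoke integration.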

Why it matters.

Standard contracts reduce glue, improve security and observability, and make tool catalogs portable across assistants and agents. It’s the plumbing that lets your agent and your assistant use the same invoicing tool without two separate integrations. (Anthropic, 2024; Model Context Protocol, 2024)

What MCP is not.

Not an agent. Not a workflow engine. It makes those things possible by giving assistants and agents, whether built on Claude, ChatGPT, or a local model served through Ollama, safe and discoverable access to the tools and data that do the work.

If you have a hammer, everything looks like a nail

Don’t overbuild. If you’re just connecting different apps together, automation wins.

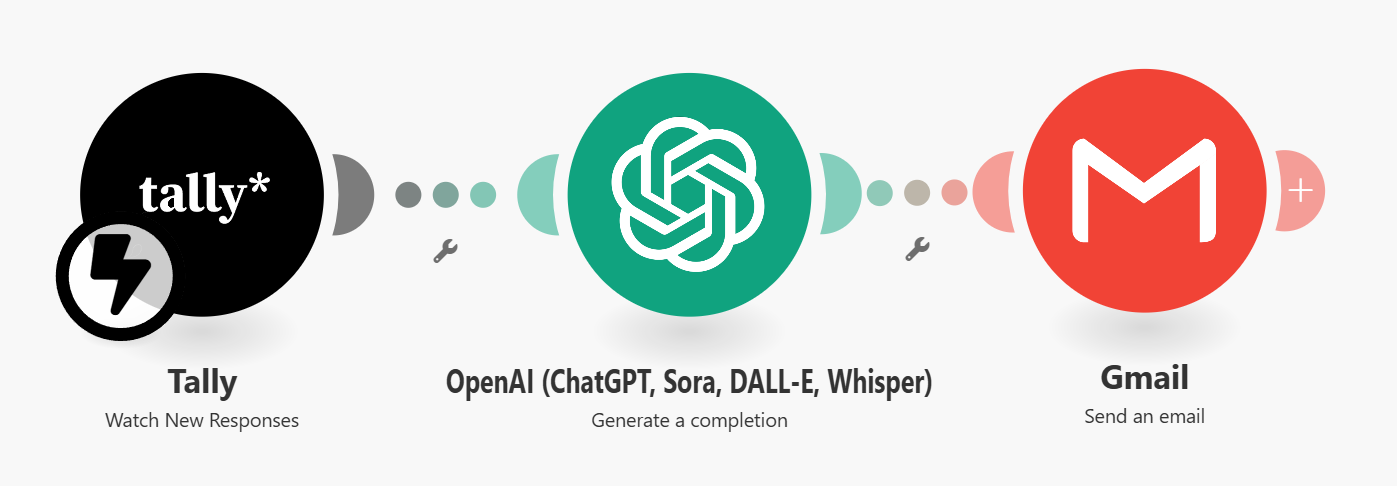

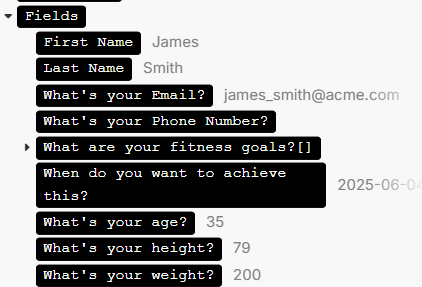

If you need something generative (for example, a form submission comes in and you want to extract its data), an AI automation works well.

If the flow needs to judge which step is right and then take it, an agent with guardrails is a solid pick.

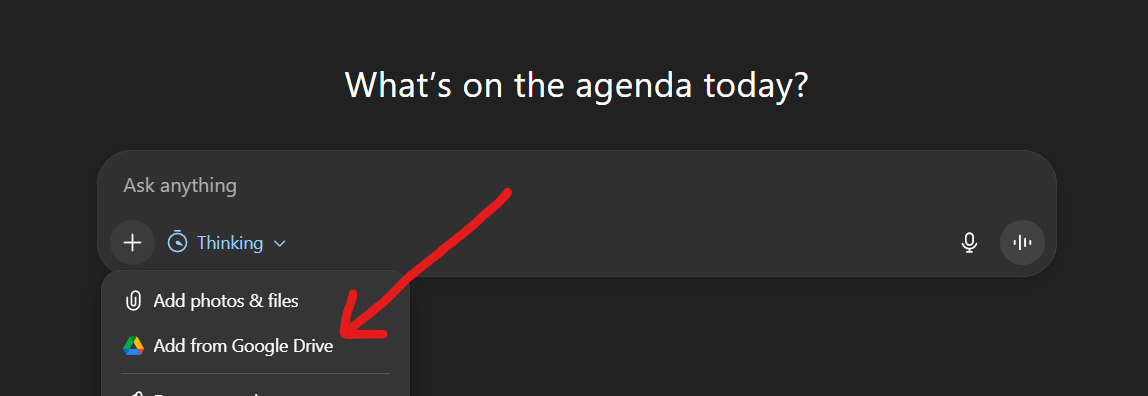

And if you want to give your AI tool access to different systems while you are using it, connecting it to an MCP server is enough.
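The rubric above boils down to a few yes/no questions. A rough sketch; the three flags and `choose_approach` are our own simplification, and real projects often mix approaches (an agent can itself call automations or MCP servers):

```python
def choose_approach(needs_generation: bool, needs_judgment: bool,
                    interactive_tool_access: bool) -> str:
    """Pick the lightest option that covers the need; flags are a hypothetical simplification."""
    if interactive_tool_access:
        return "MCP server"             # give your AI tool access while you use it
    if needs_judgment:
        return "agent with guardrails"  # the flow must decide its own next steps
    if needs_generation:
        return "AI automation"          # one generative step inside a fixed flow
    return "automation"                 # just connecting apps: don't overbuild
```

The fall-through order encodes "don't overbuild": plain automation is the default, and each step up in capability needs a specific reason.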

One last caution: the more AI you add, the more you must manage variance and context decay. Use structured outputs, time-boxed plans, budgets, and approvals. Keep humans in the loop where stakes are high. (Google DeepMind, 2024; Microsoft WorkLab, 2024)

References

Anthropic. (2024, November 25). Introducing the Model Context Protocol (MCP). https://www.anthropic.com/news/model-context-protocol

Gao, M., Bu, W., Miao, B., Zhang, Y., & Zhang, C. (2024, November 17). Generalist virtual agents: A survey on autonomous agents across digital platforms (arXiv:2411.10943). arXiv. https://arxiv.org/abs/2411.10943

Google Cloud. (n.d.). Orchestrate generative AI with Workflows [Video]. Retrieved October 14, 2025, from https://cloud.google.com/workflows/docs/generative-ai

Google DeepMind. (2024, April 19). The ethics of advanced AI assistants. https://deepmind.google/discover/blog/the-ethics-of-advanced-ai-assistants/

Make. (2024, May 30). Workflow automation: How to put your work on autopilot. https://www.make.com/en/blog/workflow-automation

Microsoft WorkLab. (2024, November 19). What are AI agents, and how do they help businesses? https://www.microsoft.com/en-us/worklab/ai-agents

Model Context Protocol. (2024, November 5). Specification 2024-11-05. https://modelcontextprotocol.io/specification/2024-11-05

Model Context Protocol. (2025, June 18). Specification 2025-06-18. https://modelcontextprotocol.io/specification/2025-06-18

Tribe AI. (2024, October 10). A gentle introduction to structured generation with Anthropic API. https://tribe.ai/blog/structured-generation-anthropic