It often starts late. A DM after midnight that sounds like it knows your week. The cadence is right. The compliments land with surgical accuracy. You answer because it feels easier than sleep. You keep answering because the replies feel like a mirror - curated, warm, and slightly addictive. You call it curiosity. They call it conversion.

Catfishing isn’t new. What’s new is speed, scale, and the intimacy physics of generative systems that can learn your voice in minutes and your vulnerabilities in hours. In 2022, people in the U.S. reported $1.3 billion in losses to romance scams, a figure that had more than doubled since 2020 and a signal that emotional fraud travels faster than our defenses (Fletcher, 2023). The following year, FTC data put romance-scam losses at $1.14 billion, with the highest median loss among imposter scams (Federal Trade Commission, 2024). Money is the small story. The larger one is time - and how much of it a founder will give away when a machine feels like kin.

What you’re seeing in your inbox has a name in the literature: Intimacy Manipulated Fraud Industrialization, or IMFI. Researchers describe it as romance fraud made corporate: chat factories, scripts, shift handoffs, internal CRMs, and KPIs for “affection velocity.” In a 2024 study in Computers in Human Behavior, Wang and Topalli write that IMFI is “industrialized through enterprise business practices, software platforms, and customer service processes” (Wang & Topalli, 2024). It’s not a loner in a hoodie. It’s an operation with product, growth, and retention targets, optimized by models that never get tired and never take offense.

If you run a small company, you live in open loops, and AI catfishing exploits them with tools that map public breadcrumbs to tone, tempo, and private folklore. A machine needs only your investor thread, a podcast clip, and last week’s launch post to fake context. “With the help of AI, creating a fake profile is just a few clicks away,” notes the Partnership on AI in a 2025 brief on online dating and deception (Khan, 2025). The “profile” isn’t just a face. It’s a language. It echoes your slang, your pace, your jokes about fundraising tasting like copper. The mimicry feels respectful until it isn’t.

You’ll hear that this is mostly “consumer” crime. That framing is comforting and wrong. The FBI has been explicit that confidence scams intersect with investment fraud and professional targeting. It’s the pig-butchering pipeline that begins with a warm chat and ends with a dashboard showing you “paper gains” you’ll never withdraw (Federal Bureau of Investigation, 2024, 2025). The voice nudging you along that pipeline doesn’t even need to be a person. It can be a bot stitched into Telegram, seeded with your public data, answering like a friend. In a May 2025 public service announcement, the FBI warned Americans not to assume that messages or voice notes from supposed senior U.S. officials are authentic - an alert less about bureaucracy than about how persuasive machine-made authority now sounds (Federal Bureau of Investigation, Internet Crime Complaint Center, 2025).

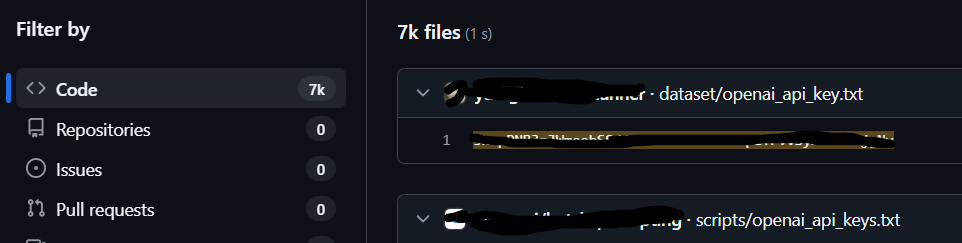

Founders underestimate how many of their days are already on the record: hiring posts, conference Q&As, parenting jokes in an interview. That’s enough raw material to pair a face that reverse-image search won’t catch with a chat history that feels unedited. In early 2024, Bloomberg reported a wave of AI-generated dating profiles with unique, photorealistic images that avoid the telltale recycling of stock models (Bloomberg News, 2024). When the picture is synthetic, there is nothing to reverse-image search against. When the writing is synthetic, there are no misspellings to catch. The typos vanish. The story holds. The pressure you feel to trust it rises.

The psychology isn’t magic. It’s statistics wrapped in tenderness. Script libraries capture the arcs that keep people engaged - banter, near-miss vulnerability, a narrated need. “AI catfishing is increasing and is likely to rise further,” cybersecurity expert Jessica Barker said in 2024, noting that the technology serves everything from people trying to improve their odds on the apps to “deeply manipulative and damaging scams” (Barker, as cited in Coffey, 2024). You can feel the gradient in your own DMs, in the way a compliment eventually becomes a request. It’s product-market fit for your attention.

Here’s the industrial part that matters. The chat you’re having at 1:12 a.m. could be handed off across time zones. One persona, many operators, all stabilized by an LLM that keeps style consistent and mood calibrated. Wang & Topalli (2024) describe structured roles such as moderators and “customer service” staff, even QA processes that supervise pipelines of intimacy and extraction. That factory model means the con doesn’t stall when a human gets tired or sloppy. It also means your conversation is stitched to a funnel: affection → isolation → capital. It helps explain why the FTC keeps seeing romance-scam losses that outpace other imposter scams on a per-victim basis (Federal Trade Commission, 2024). The hours you give are not free. They are mapped to revenue.

The entry points have multiplied. “Wrong-number” texts that become gentle friendships. Cold DMs that feel like fan mail. Even “we met at…” openers that reference a real venue from your feed. The FTC’s April 2025 text-scam spotlight describes how innocuous misdirects evolve into relationships with romantic undertones, then into investment pitches routed to bogus platforms (Federal Trade Commission, 2025a). It reads like nothing and becomes everything. By the time money shows up, the emotional debt is older than you remember.

There’s also the ambient climate: loneliness used as an infrastructure layer for fraud. As WIRED argued in February 2025, the “loneliness epidemic” is now also a security problem, with nearly $4.5 billion in reported U.S. losses to digital romance scams over the past decade (Newman & Burgess, 2025). You don’t need to be lonely to be groomed; you only need to be online with good intentions and a schedule. AI compresses the time it takes to feel seen. It makes seeing easy. It makes being seen a service.

If you manage payroll and product, it’s tempting to separate your personal life from the business. The actors feasting on AI intimacy don’t respect that seam. They pivot from late-night affection to “can I help your startup?” They ask for your deck, your user counts, your “private” roadmap, leveraging a real detail to claim a shared future. In the FTC’s 2025 fraud summary, bank transfers and cryptocurrency again dominated loss totals - methods common to romance-investment hybrids where the relationship is the hook and a trading app is the net (Federal Trade Commission, 2025b; Federal Trade Commission, 2024). What disappears isn’t only money. It’s momentum. The quarter you planned becomes a recovery quarter.

For those who still think this is only a “consumer” problem: ask why the stories so often include founders, freelancers, and SMB executives. Catfishing maps neatly to the rhythms of leadership - public visibility, constant messaging, a permanent deficit of time. It also maps to the psychology of making things. Builders over-index on optimism. On the belief that new connections are how you survive. IMFI exploits that bias the way ad tech exploits dopamine. It learns what you like, then sells you more of it. The product is a person that isn’t.

We should be honest about how credible the theater has become. Sometimes there is a real face and a fake backstory. Sometimes it’s the other way around. Sometimes the voice note is a clone of someone you actually know. The FBI’s May 2025 PSA about impersonation of U.S. officials wasn’t a curiosity; it was an index of how cheap it now is to deliver authority via audio at scale and how ordinary people misrecognize it under stress (Federal Bureau of Investigation, Internet Crime Complaint Center, 2025). The same dynamics move in romance. You don’t need an Oscar-grade deepfake. You need thirty seconds of “close enough,” delivered when your guard is down.

This isn’t a morality tale. People use AI to script charm on dating apps for the same reason they hire editors for emails: fear of being unchosen. But somewhere between a grammar fix and a synthetic life is a line that matters. As Barker put it, the motives range “from people simply seeking to improve their chances of success on the apps to deeply manipulative and damaging scams” (Barker, as cited in Coffey, 2024). The same gradient shows up in founder DMs. A little assistance becomes an actor, a would-be soulmate who wants to “support your mission.” A week later, you’re screen-sharing a wallet. Or explaining to your team why you were distracted when it counted.

There’s a final tension here that matters for small companies. The more you share to grow, the more substrate a machine has to love-bomb you convincingly. The more precise your public identity, the more precise the mimicry. The FTC’s trend data show the macro picture: $12.5 billion in reported fraud losses across categories in 2024, with investment scams leading and imposter scams second (Federal Trade Commission, 2025b). The micro picture is one founder, one phone, one night. The extraction isn’t brute force. It’s consent. It’s you choosing to keep talking because the conversation feels like oxygen. Until it feels like debt.

The mechanics are consistent. Find the person who has to be open, match their rhythm, mirror their hope, and route the relationship into a payment rail or a private doc. The harm is cumulative, quiet, and professionally contagious. Your next decision carries the residue. Your team feels it. Your customers feel it. And the system that did it is already onboarding the next you, polite and interested and “here for you.”

That’s the part that’s hardest to sit with. Not that a stranger lied. That the lie was arranged to sound like care.

References

- Bloomberg News. (2024, February 14). Scammers litter dating apps with AI-generated profile pics. Bloomberg. https://www.bloomberg.com/news/newsletters/2024-02-14/scammers-litter-dating-apps-with-ai-generated-profile-pics

- Coffey, H. (2024, April 22). Am I falling for ChatGPT? The dark world of AI catfishing on dating apps. The Independent. https://www.independent.co.uk/life-style/ai-catfishing-dating-apps-chatgpt-b2531460.html

- Fair, L. (2024, February 13). “Love stinks” – when a scammer is involved. Federal Trade Commission Business Blog. https://www.ftc.gov/business-guidance/blog/2024/02/love-stinks-when-scammer-involved

- Federal Bureau of Investigation. (2024, September 24). Cryptocurrency investment fraud a growing problem in Maryland. FBI Baltimore Field Office. https://www.fbi.gov/contact-us/field-offices/baltimore/news/cryptocurrency-investment-fraud-a-growing-problem-in-maryland

- Federal Bureau of Investigation. (2025, March 6). The FBI’s Operation Level Up takes a proactive approach against crypto scams. FBI Miami Field Office. https://www.fbi.gov/contact-us/field-offices/miami/news/the-fbis-operation-level-up-takes-a-proactive-approach-against-crypto-scams

- Federal Bureau of Investigation, Internet Crime Complaint Center. (2025, May 15). Senior US officials impersonated in malicious messaging campaign (Alert No. I-051525-PSA). https://www.ic3.gov/PSA/2025/PSA250515

- Federal Trade Commission. (2024, May). Who’s who in scams: A spring roundup [Data Spotlight]. https://www.ftc.gov/system/files/ftc_gov/pdf/data-spotlight-who-s-who-in-scams-a-spring-roundup.pdf

- Federal Trade Commission. (2025a, April 16). New FTC data show top text message scams of 2024; Overall losses to text scams hit $470 million [Press release]. https://www.ftc.gov/news-events/news/press-releases/2025/04/new-ftc-data-show-top-text-message-scams-2024-overall-losses-text-scams-hit-470-million

- Federal Trade Commission. (2025b, March 10). New FTC data show a big jump in reported losses to fraud to $12.5 billion in 2024 [Press release]. https://www.ftc.gov/news-events/news/press-releases/2025/03/new-ftc-data-show-big-jump-reported-losses-fraud-125-billion-2024

- Federal Trade Commission, Division of Consumer Response and Operations Staff. (2025, April 14). Top text scams of 2024 [Data Spotlight]. https://www.ftc.gov/news-events/data-visualizations/data-spotlight/2025/04/top-text-scams-2024

- Fletcher, E. (2023, February 9). Romance scammers’ favorite lies exposed. Federal Trade Commission Data Spotlight. https://www.ftc.gov/news-events/data-visualizations/data-spotlight/2023/02/romance-scammers-favorite-lies-exposed

- Khan, T. (2025, February 12). Love on the mainframe: How AI is changing online dating. Partnership on AI. https://partnershiponai.org/love-on-the-mainframe-how-ai-is-changing-online-dating/

- Newman, L. H., & Burgess, M. (2025, February 13). The loneliness epidemic is a security crisis. WIRED. https://www.wired.com/story/loneliness-epidemic-romance-scams-security-crisis/

- Wang, F., & Topalli, V. (2024). The cyber-industrialization of catfishing and romance fraud. Computers in Human Behavior, 154, 108133. https://doi.org/10.1016/j.chb.2023.108133