The email lands at 8:02 a.m. It sounds like your CEO. The cadence. The shorthand. A nudge to “push the wire before noon.” Not dramatic. Efficient. It even references last Friday’s all-hands. You feel the reflex in your fingers. Move the money. Keep the week clean.

Pause.

This isn’t the old spam you know how to dodge. This is bespoke. Your name in the subject line. A tone that sounds like a person you’ve shared rooms with. And the artifact isn’t a single email anymore. It’s an assembled kit – an LLM writing in your boss’s voice, an HTML page that mirrors your vendor portal, a PDF “invoice” with the right logo and kerning. The work isn’t sloppy. It’s polished. “If you extrapolate the commonly understood use cases of GenAI technology,” Verizon wrote, “it could potentially help with the development of phishing [and] malware” (Verizon, 2024, p. 16). That reads like an understatement when you’re staring at a perfect fake.

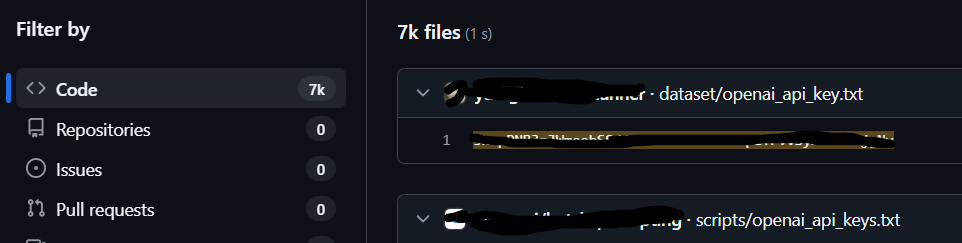

What’s changed is not the scam. It’s the supply chain of deception. Where a human once labored over a single lure, a kit now mass-produces variations that read like inside jokes. Black-market LLMs – WormGPT, FraudGPT – are advertised as point-and-shoot tools for “crafting spear phishing emails” and other offensive tasks (SecureWorld, 2023). They lower the barrier from “skilled writer with patience” to “anyone with a credit card and a forum login.” Behind the kits: prompt recipes, branded templates, cloned landing pages, and copy tuned on your company’s public breadcrumbs. It’s polymorphic by default.

The sales pitch to criminals is blunt. One dark-web ad framed FraudGPT as “exclusively being used for offensive purposes” – not curiosity, not research (SecureWorld, 2023). Another explainer distilled WormGPT as a black-hat fork trained to “bypass… guardrails,” tuned for BEC outcomes (Infosecurity Europe, 2023). This is the quiet part out loud: social engineering has always been a writing problem. Now the writing is instant, tireless, and uncannily familiar. And it doesn’t arrive alone. A well-made kit choreographs channels – email, a timed Teams ping, an SMS – to close the loop. Microsoft’s threat team described Storm-1811 using Teams calls and messages to impersonate IT/help desk, stitching phone and chat into one social-engineering arc (Microsoft, 2024).

The numbers around it feel clinical until you map them to a Tuesday morning. The FBI’s Internet Crime Complaint Center recorded $16.6 billion in cybercrime losses in 2024, a 33% jump, with employees duped into moving money a leading driver (Fung, 2025). IC3’s BEC advisory called it “a $55 billion scam,” noting a further 9% rise in identified global exposed losses between December 2022 and December 2023 (FBI IC3, 2024). These are not the staggering heists you read about once a decade. They are the everyday wire. The invoice that almost passed. The HTML “portal” that matched your vendor down to the shade of blue in the favicon.

The craft has matured. Kits scrape public signals – press releases, LinkedIn, GitHub READMEs, town-hall transcripts – then train a style. The first message sets context. The second escalates urgency. The third arrives from a different channel to “confirm.” Microsoft chronicled campaigns that timed tax-season lures with HTML attachments leading to fake download pages – realistic enough to make a target click, quiet enough to dodge a filter (Microsoft Threat Intelligence, 2024). You see the arc: a calendar exploit. A season. A plausible pretext that reads like your work life.

Why does it work on smart people? Because kits stop sounding like strangers. They sound like you. They reuse phrases you’ve said aloud on public calls. They reference a client by nickname. They mirror your punctuation. The cliché tells we trained people to spot – broken grammar, weird capitalization, greetings that feel off – are now liabilities. Good controls aged into folk wisdom. AI takes away your favorite red flags. Verizon said the threat model hasn’t changed as much as the quality and credibility of the lures: GenAI “considerably improve[s] the quality of phishing emails and websites,” even when the mechanics remain familiar (Verizon, 2024, p. 16). The copy doesn’t fight itself anymore. It flows.

There’s another layer: mutability. Polymorphic phish aren’t just A/B tests. They are C through Z in the same hour. If one variant gets blocked, the kit regenerates with different syntax, a new subdomain, a different faux “from” line, a fresh PDF hash. Your filter learns one fingerprint; the kit sheds that skin. This is why “spray-and-pray spear phishing” is not a joke. It’s an accurate phrase for personalization at scale. One hundred people receive one hundred distinct, context-aware emails. No mass pattern to catch. Nothing obvious to share as a global indicator of compromise. Just a series of almost-right notes played quickly enough to feel like music.
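To make the fingerprint problem concrete, here is a minimal sketch of why an exact-match blocklist loses to a rephrased lure. Everything in it is an assumption for illustration: the two messages are invented, `fingerprint` and `similarity` are hypothetical helper names, and real mail filters use far richer signals than a SHA-256 digest or a `difflib` ratio.

```python
import hashlib
from difflib import SequenceMatcher


def fingerprint(text: str) -> str:
    """Exact-match fingerprint: SHA-256 of the message's UTF-8 bytes."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def similarity(a: str, b: str) -> float:
    """Rough character-level similarity in [0, 1] using difflib."""
    return SequenceMatcher(None, a, b).ratio()


# Hypothetical lure texts, invented for this example only.
blocked = "Hi Sam, please push the wire to the vendor before noon. Thanks, J."
variant = "Hi Sam, can you push the vendor wire through before noon? Thanks, J."

# A blocklist that only knows the fingerprint of the first message.
blocklist = {fingerprint(blocked)}

# The rephrased variant hashes to a different digest, so it is not caught.
variant_is_blocked = fingerprint(variant) in blocklist

# A fuzzy comparison still sees the two messages as closely related.
score = similarity(blocked, variant)

print("exact-hash match:", variant_is_blocked)
print("similarity:", round(score, 2))
```

The exact-hash check misses the variant even though the two messages are mostly the same text. That gap is the room a kit works in: a few changed words are enough to produce a new fingerprint.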

The kits also borrow voice. Not deepfake theater – not here – but voice as texture. A “Thanks, J.” at the end of the message because J always signs that way. A three-word phrase your VP uses when she’s stressed. The meta-signal is the tell: AI doesn’t just write sentences; it writes you. And because your organization speaks in patterns, the kit learns those patterns with embarrassing ease. The distance between tone and authority collapses. An email that sounds like power is granted power.

If you want the blunt edge of the trend, Microsoft’s 2025 Cyber Signals issue put it clearly: modern social engineering “collect[s] relevant information about targeted victims and arrang[es] it into credible lures… Various AI tools can quickly find, organize, and generate information, thus acting as productivity tools for cyberattackers” (Microsoft, 2025). That’s the logic of the kit. It treats you like a dataset. Then it writes the shortest path through you.

The routes in are ordinary. A vendor renewal. A new bank account form. A note “from IT” about a security update. The hook only bites if the rhythm feels right. So kits weaponize rhythm. The subject lines reflect your calendar. The asks land inside your attention patterns. During close week, the lures are invoices. During open enrollment, HR forms. During tax season, “W-2 copies pending.” Microsoft’s tax-season write-up is a mirror: “lures masquerading as tax-related documents provided by employers,” delivered as HTML pages that did exactly what a harried employee expects – prompt a download (Microsoft Threat Intelligence, 2024). The kit doesn’t need to be clever. It just needs to be timely.

And yes, the money still moves. In headlines, it’s an employee who sent $25 million after a fake live call. In your building, it’s $48,300 to a fresh account because a note “from the CEO” sounded casual enough to pass. The FBI’s own phrasing for BEC remains brutally simple: criminals “evolving in their techniques to access… accounts,” from small local businesses to large corporations (FBI IC3, 2024). Kits make that evolution cheap. You don’t need a crew hand-typing. You need prompts, templates, a list. Then volume. Then speed.

The email you almost acted on? It wasn’t written by your CEO. It was written by a system that understands your CEO’s footprints better than your training deck does. We keep trying to draw a bright line between “obvious fake” and “obvious real.” Kits live in the gray. Close enough is enough. Filters get better; content gets smoother. People get briefed; kits rephrase. You can see the arms race in Microsoft’s blog cadence alone: Teams abuse here; Quick Assist misuse there; new pretexts mapped to whatever the quarter offers (Microsoft, 2024; Microsoft, 2025). When the gap between sound and truth narrows, judgment becomes the battleground.

A last frame, because the scale matters. In 2024, the FBI counted nearly 860,000 reports to IC3. Losses rose a third to $16.6 billion. Reuters called out the role of “low-tech scams such as investment frauds and phishing attacks where employees were duped into transferring money” (Fung, 2025). That’s the part that should bruise: this isn’t exotic. It’s ordinary pressure applied with extraordinary mimicry. The kit meets you where you live – inbox, calendar, process – and makes a near-perfect sound. You blink. You move the money. And by noon, the thread is gone.

We like to think sophistication announces itself. That a truly advanced attack feels cinematic. AI-generated phishing kits invert that expectation. They don’t dazzle. They fit. They blend into the noise of a Monday. Their genius is restraint. Their ethic is speed. And their portrait of you – your tone, your rituals, your workday – is now a commodity available to the highest bidder.

The email at 8:02 a.m. was an imitation of trust. It did not need to fool everyone. It only needed to be good enough, fast enough, close enough to the way your world writes to itself.

That’s the part that lingers. The way it sounded like you.

References

FBI Internet Crime Complaint Center. (2024, September 11). Business Email Compromise: The $55 billion scam. https://www.ic3.gov/PSA/2024/PSA240911

Fung, B. (2025, April 23). FBI says cybercrime costs rose to at least $16 billion in 2024. Reuters. https://www.reuters.com/world/us/fbi-says-cybercrime-costs-rose-least-16-billion-2024-2025-04-23/

Infosecurity Europe. (2023, August 10). The dark side of generative AI: Five malicious LLMs. https://www.infosecurityeurope.com/en-gb/blog/threat-vectors/generative-ai-dark-web-bots.html

Microsoft. (2024, May 15). Threat actors misusing Quick Assist in social engineering attacks leading to ransomware. https://www.microsoft.com/en-us/security/blog/2024/05/15/threat-actors-misusing-quick-assist-in-social-engineering-attacks-leading-to-ransomware/

Microsoft. (2025, April 16). Cyber Signals Issue 9: AI-powered deception: Emerging fraud threats and countermeasures. https://www.microsoft.com/en-us/security/blog/2025/04/16/cyber-signals-issue-9-ai-powered-deception-emerging-fraud-threats-and-countermeasures/

Microsoft Threat Intelligence. (2024, March 20). Targets and innovative tactics amid tax season. https://www.microsoft.com/en-us/security/blog/2024/03/20/microsoft-threat-intelligence-unveils-targets-and-innovative-tactics-amid-tax-season/

SecureWorld. (2023, July 26). FraudGPT AI bot authors malicious code, phishing emails, and more. https://www.secureworld.io/industry-news/fraudgpt-malicious-ai-bot

Verizon. (2024). 2024 Data Breach Investigations Report. https://www.verizon.com/business/resources/reports/2024-dbir-data-breach-investigations-report.pdf