The most expensive lie of the AI era didn’t need malware. It needed a meeting. In early 2024, a Hong Kong finance employee at global engineering firm Arup joined a routine video call with “senior management.” The faces and voices looked right. The instructions sounded right. Minutes later, HK$200 million (~$25 million) was gone. Arup later confirmed it was a deepfake fraud and that “fake voices and images were used.” Their systems were fine; human trust wasn’t (Milmo, 2024).

For startup founders and SMB executives, that incident isn’t edge-case theater. It is a signal. Deepfake-driven schemes are no longer exotic one-offs. The FBI has warned that malicious actors now use AI-generated audio and video to impersonate “family members, co-workers, or business partners,” enabling fraud with “devastating financial losses” and reputational damage (FBI, 2024a).

The lie factory goes mainstream

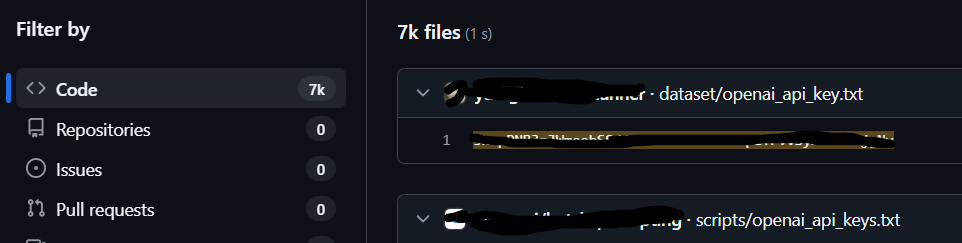

Deepfakes ride two curves that matter to operators: cost and convenience. As Arup’s CIO, Rob Greig, put it, “It’s freely available to someone with very little technical skill to copy a voice, image or even a video” (Elliott, 2025).

Law enforcement is seeing the same logic at national scale. In December 2024, the FBI’s Internet Crime Complaint Center stated plainly that generative AI “reduces the time and effort” needed to deceive at scale, boosting the believability of fraud (FBI, 2024b). In May 2025, the Bureau warned that criminals were impersonating senior U.S. officials via text and AI-generated voice to pry open personal accounts—then pivoting through those accounts to reach more targets (Vicens, 2025).

The political system has already felt the tremor. In January 2024, just before the state’s presidential primary, New Hampshire voters received robocalls mimicking President Biden’s voice and telling them not to vote. State officials had opened an investigation by January 22 (Swenson & Weissert, 2024). By May, the FCC had proposed a $6 million fine against the consultant behind the scheme, and prosecutors had filed criminal charges (PBS NewsHour, 2024). Whether your business is political or purely commercial, the point is the same: authenticity cues you once trusted (voice, likeness, caller ID) are trivial to fake and easy to weaponize.

Why founders and SMB execs are ideal targets

Attackers follow ROI. SMB teams run lean, ship fast, and make more ad hoc decisions across email, Slack, Zoom, and phones. That makes “technology-enhanced social engineering” an operational risk, not just a privacy headache. In the Arup case, the attack didn’t breach software; it exploited believable presence (Milmo, 2024; Elliott, 2025).

Fraud telemetry backs this up. Sumsub’s research found global deepfake incidents surged more than tenfold from 2022 to 2023, with identity-fraud patterns shifting toward more complex, multi-step schemes. The most affected industries included online media, professional services, healthcare, transportation, and gaming—exactly the verticals where SMBs operate on thin margins and move money daily (Sumsub, 2023). The Global Cyber Alliance echoed those trends in 2025, citing a 1,740% spike in North American deepfake fraud, and noting that most organizations still lack formal protocols (Global Cyber Alliance, 2025).

Humans vs. synthetic certainty

Founders often ask, “Can’t we just tell?” The evidence says: not reliably. In a PLOS ONE study, participants who listened to genuine and deepfake speech identified the fakes only 73% of the time, and training improved detection only slightly (Mai et al., 2023). McAfee’s consumer research rhymes with this: “70% of people said they were either unsure if they would be able to tell or believe they wouldn’t be able to,” and victims describe cloned audio as “completely her voice” with the same inflection (McAfee, 2023, pp. 10–11).

That gap between confidence and capability is fuel. Criminals don’t need perfect illusions; they need plausible ones that arrive at the right moment, inside the right channel, wearing the right identity. Greig’s phrase “freely available” is the uncomfortable truth (Elliott, 2025).

The attack patterns that matter

The patterns now affecting SMBs are simple to describe and brutal to absorb.

A video call with a familiar face that asks for a transfer. That was the Arup playbook: a fake CFO, other “colleagues,” a routine request, and an employee conditioned to comply. Hong Kong police and multiple outlets documented how the entire meeting was fabricated end-to-end (Milmo, 2024).

A phone call from a cloned voice, sometimes paired with a spoofed caller ID, asking for credentials, 2FA codes, or urgent funds. The FBI’s May 2024 notice spells out that attackers now use voice and video cloning to impersonate people you already trust and to move money or extract secrets (FBI, 2024a).

A text that starts “like a friend,” then pushes you to “a secure portal.” Reuters summarized the FBI’s 2025 advisory: criminals build rapport, then steer victims to hacker-controlled sites to harvest logins and escalate (Vicens, 2025).

A reputational nuke that detonates on your timeline. Election-season robocalls are a public example, but the same mechanics are showing up in local markets, vendor disputes, and employment feuds. Synthetic speech and video lower the cost of manufacturing a “gotcha” clip that clients and investors will see long before you can refute it (Swenson & Weissert, 2024; FBI, 2024b).

Why this is worse than “just another scam”

Deepfakes don’t only steal money; they erode the substrate that SMBs rely on to move fast—shared reality. As Hany Farid writes, the “insidious side-effect of deepfakes and cheap fakes is that they poison the entire information ecosystem,” casting doubt on any visual evidence (Farid & McIsaac, 2024).

That erosion hits founders where it hurts. Sales calls. Investor updates. Vendor negotiations. Hiring. Collections. Every channel that depends on a common sense of who is who and what is true turns brittle under deepfake pressure. And once customers and partners internalize that brittleness, even authentic messages can be dismissed—the liar’s dividend, paid by you.

What the next quarter looks like

There’s a reason the Arup case and the New Hampshire robocall sit side-by-side in this story. They reveal the same playbook at different scales: attack trust, weaponize presence, and push the target to act before the lie collapses. That is the core security risk for startups and SMBs in 2024–2025. Not zero-days. Zero-doubts.

You don’t need to be a Fortune 50 company to be interesting. If your business moves funds, handles client data, or operates on social capital, you are interesting enough. That includes professional services, healthcare networks, boutique media, shops running logistics, gaming studios, and any company where the value chain still runs through people (Sumsub, 2023).

Consider the Arup confirmation again. “None of our internal systems were compromised,” the company said (Milmo, 2024). That line should chill operators. It says the quiet part out loud: software hardening is necessary but not sufficient. The risk has shifted from code execution to credibility execution. The breach is belief.

Closing the circle

Founders pride themselves on speed, instinct, and conviction. Deepfakes hunt exactly those strengths. They don’t need to break your stack to break your company. They just need to sit across from you, look like authority, and ask.

On the day the lie shows up in your inbox, your meeting link, or your voicemail, the only question that matters is the one leaders least want to ask: how sure are you that “real” is real?

References

Elliott, D. (2025, February 4). ‘This happens more frequently than people realize’: Arup chief on the lessons learned from a $25m deepfake crime. World Economic Forum. https://www.weforum.org/stories/2025/02/deepfake-ai-cybercrime-arup/

Farid, H., & McIsaac, C. (2024, August 21). AI-generated misinformation: Silent saboteur of the 2024 election? Divided We Fall. https://dividedwefall.org/ai-generated-misinformation-election-2024/

Federal Bureau of Investigation. (2024a, May 8). FBI warns of increasing threat of cyber criminals utilizing artificial intelligence. https://www.fbi.gov/contact-us/field-offices/sanfrancisco/news/fbi-warns-of-increasing-threat-of-cyber-criminals-utilizing-artificial-intelligence

Federal Bureau of Investigation. (2024b, December 3). Criminals use generative artificial intelligence to facilitate financial fraud (IC3 Public Service Announcement). Internet Crime Complaint Center. https://www.ic3.gov/PSA/2024/PSA241203

Global Cyber Alliance. (2025, May 28). Seeing isn’t believing: Staying safe during the deepfake revolution. https://globalcyberalliance.org/about-deepfakes/

Mai, K. T., Bray, S., Davies, T., & Griffin, L. D. (2023). Warning: Humans cannot reliably detect speech deepfakes. PLOS ONE, 18(8), e0285333. https://doi.org/10.1371/journal.pone.0285333

McAfee. (2023, March 20). Beware the Artificial Impostor: A McAfee Cybersecurity Artificial Intelligence Report. https://www.mcafee.com/content/dam/consumer/en-us/resources/cybersecurity/artificial-intelligence/rp-beware-the-artificial-impostor-report.pdf

Milmo, D. (2024, May 17). UK engineering firm Arup falls victim to £20m deepfake scam. The Guardian. https://www.theguardian.com/technology/article/2024/may/17/uk-engineering-arup-deepfake-scam-hong-kong-ai-video

PBS NewsHour. (2024, May 23). Political consultant behind AI-generated Biden robocalls faces $6 million fine and criminal charges. https://www.pbs.org/newshour/politics/political-consultant-behind-ai-generated-biden-robocalls-faces-6-million-fine-and-criminal-charges

Sumsub. (2023, November 28). Global deepfake incidents surge tenfold from 2022 to 2023. https://sumsub.com/newsroom/sumsub-research-global-deepfake-incidents-surge-tenfold-from-2022-to-2023/

Swenson, A., & Weissert, W. (2024, January 22). Fake Biden robocall being investigated in New Hampshire. Associated Press. https://apnews.com/article/new-hampshire-primary-biden-ai-deepfake-robocall-f3469ceb6dd613079092287994663db5

Vicens, A. J. (2025, May 15). Malicious actors using AI to pose as senior U.S. officials, FBI says. Reuters. https://www.reuters.com/world/us/malicious-actors-using-ai-pose-senior-us-officials-fbi-says-2025-05-15/