You’re on your phone between meetings. An ad promises “ChatGPT Pro: free, instant, no wait.” It looks clean. The icon is convincing. You tap. A minute later your brand’s Facebook ad account is spending money you didn’t approve, your browser feels slower, and your CTO asks why a mystery app now has permissions on the company Instagram. The exploit wasn’t dramatic. It was ordinary. That’s how it wins.

This is about how criminals weaponize the gloss of AI helpers (the “quick access” extensions, the “productivity” add-ons, the “official” mobile apps) to reach your business through consumer habits. It doesn’t start in your SOC. It starts in the feed, the app store, the Chrome Web Store. It starts where you and your team live when you’re tired. Where the defense is just a thumb.

The lure is predictable because we are. Early 2023 saw the first wave: fake “ChatGPT” extensions in official stores, complete with working chatbot panes to lower suspicion. Guardio Labs wrote it plainly: a Chrome extension offering “quick access to fake ChatGPT functionality was found to be hijacking Facebook accounts and installing hidden account backdoors,” even silently registering a rogue Facebook app to grant the attackers “super-admin permissions” (Tal, 2023). The extension connected to a real chatbot API so it felt legitimate, while quietly harvesting cookies and business account details in the background (Tal, 2023). You didn’t have to be reckless to fall for it. You just had to be moving.

Platform defenders noticed the trend. Meta’s CISO summarized the pattern with unusual clarity: “Since March alone, our security analysts have found around 10 malware families posing as ChatGPT and similar tools… some of these malicious extensions did include working ChatGPT functionality alongside the malware” (Rosen, 2023). The point isn’t novelty; it’s camouflage. Real functionality masks the theft. Meta also said it had “detected and blocked over 1,000 of these unique malicious URLs” from being shared across its apps, but the operators quickly pivoted to new themes like Bard and TikTok marketing support (Rosen, 2023). Trend rides trend. The mask changes; the hand stays the same.

If the browser is the door, the app store is the bright foyer with a slippery floor. WIRED’s reporting on “fleeceware” documented scam apps that pretended to offer ChatGPT access and leaned on free trials that turned into recurring subscriptions. No malware needed, just quiet extraction. “We define fleeceware as something that charges an extraordinary amount of money for a feature that is available freely or at very low cost elsewhere,” Sophos researcher Sean Gallagher said (Newman, 2023). Some of these apps even used OpenAI’s API, then truncated responses to push you into paying. The con wasn’t technical. It was behavioral: monetizing confusion about what’s official and what’s not.

At the same time, supply-chain opportunists aimed at the developer layer: the folks who wire up integrations when marketing needs an AI widget “today.” In late 2024, Kaspersky reported a year-long PyPI campaign whose packages posed as wrappers for ChatGPT and Claude. The packages worked well enough to pass a sniff test, and they also dropped the JarkaStealer infostealer to lift browser data and session tokens (Kaspersky GReAT, 2024). There’s a grim symmetry here: the same “functional mask + hidden siphon” motif plays on both sides of the stack, consumer-facing and developer-facing.

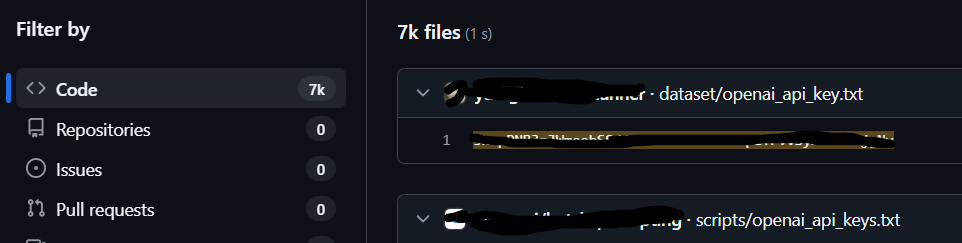

Numbers help cut through denial. ESET’s telemetry is blunt: “ESET telemetry in H2 2023 recorded blocking over 650,000 attempts to access malicious domains whose names include the string chapgpt or similar text,” many linked to insecure “bring your own key” web apps and malicious extensions (ESET Research, 2023). One example ESET named asked users to paste their OpenAI API key into a page that then sent the key to its own server; a Censys sweep suggested copies of that app on thousands of servers (ESET Research, 2023). The criminals don’t need your crown jewels if they can bill your key and bleed your ad accounts. Theft becomes a metered service.
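
To see how a “chapgpt”-style lure might be caught before anyone clicks, here is a minimal Python sketch of a look-alike domain check. It is not how ESET’s telemetry works. The brand tokens, the trusted set, and the 0.8 similarity threshold are assumptions for illustration, and the last-two-labels split is a naive stand-in for a proper Public Suffix List lookup.

```python
import difflib

# Assumption: brand tokens worth protecting; tune for the lures you actually see.
BRAND_TOKENS = ("chatgpt", "openai")
# Assumption: your own allowlist of registrable domains you trust outright.
TRUSTED = {"openai.com", "chatgpt.com"}


def registrable_part(domain: str) -> str:
    """Naive last-two-labels split; real tooling should use the Public Suffix List."""
    labels = domain.lower().rstrip(".").split(".")
    return ".".join(labels[-2:])


def is_lookalike(domain: str, threshold: float = 0.8) -> bool:
    """Flag domains whose labels contain, or closely resemble, a brand token."""
    if registrable_part(domain) in TRUSTED:
        return False
    # Treat hyphens like dots so "chatgpt-free" splits into separate labels.
    for label in domain.lower().replace("-", ".").split("."):
        for token in BRAND_TOKENS:
            if token in label:
                return True
            # Slide a token-length window across the label (the whole label if shorter).
            for i in range(max(1, len(label) - len(token) + 1)):
                window = label[i:i + len(token)]
                if difflib.SequenceMatcher(None, window, token).ratio() >= threshold:
                    return True
    return False


for name in ("chapgpt-pro.example", "chatgpt-free-access.xyz", "chat.openai.com"):
    print(name, is_lookalike(name))
```

Run against DNS or proxy logs, a check like this flags `chapgpt-pro.example` (one swapped letter) and `chatgpt-free-access.xyz` (the brand embedded in the name) while letting `chat.openai.com` through. Expect false positives on innocent names; treat hits as a review queue, not a blocklist.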

Why does this hit founders and SMB execs so hard? Because your company is braided into your personal devices and accounts. Because the same laptop opens Figma, payroll, and your kid’s school portal. Because your Facebook Business Manager funds the top of your funnel, and that Chrome profile is already authenticated to everything. Guardio’s analysis showed the risk with uncomfortable specificity: the fake extension abused the browser’s authenticated context to call Meta’s Graph API, enumerate your ad accounts, then wire in a malicious “backdoor” app with full permissions, all while giving you a cute little chatbot popup so you felt you got what you installed (Tal, 2023). The social engineering was the interface. The persistence was the permission. The damage was the spend.
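
The abuse Guardio describes depends on what the extension was allowed to touch. One way to make that visible is to read each installed extension’s `manifest.json` (Chrome typically keeps these under the profile’s Extensions folder) and flag permissions that reach session cookies or already-authenticated sites. The Python sketch below assumes the manifest has already been parsed into a dict. The manifest keys it reads (`permissions`, `optional_permissions`, `host_permissions`, `optional_host_permissions`, `content_scripts[].matches`) are real Chrome fields, but the watch-lists are illustrative choices, not an official Chrome risk taxonomy.

```python
# Illustrative watch-lists (assumptions, not an official Chrome taxonomy).
# API permissions that can read session cookies, rewrite pages, or watch traffic.
RISKY_API_PERMISSIONS = {"cookies", "webRequest", "scripting", "debugger"}
# Host patterns that match every site.
BROAD_HOSTS = {"<all_urls>", "*://*/*", "http://*/*", "https://*/*"}
# Domains whose logged-in sessions you especially care about.
WATCHED_DOMAINS = ("facebook.com",)


def audit_manifest(manifest: dict) -> list:
    """Return findings such as 'api:cookies' or 'host:<all_urls>'."""
    perms = set(manifest.get("permissions", [])) | set(manifest.get("optional_permissions", []))
    hosts = set(manifest.get("host_permissions", [])) | set(manifest.get("optional_host_permissions", []))
    # Manifest V2 listed host patterns inside "permissions"; V3 moved them to "host_permissions".
    hosts |= {p for p in perms if p == "<all_urls>" or "://" in p}
    # Content scripts are injected into every page their "matches" patterns cover.
    for script in manifest.get("content_scripts", []):
        hosts |= set(script.get("matches", []))

    findings = [f"api:{p}" for p in sorted(perms & RISKY_API_PERMISSIONS)]
    for host in sorted(hosts):
        if host in BROAD_HOSTS or any(d in host for d in WATCHED_DOMAINS):
            findings.append(f"host:{host}")
    return findings


# Hypothetical manifest for a "quick access" helper.
example = {
    "manifest_version": 3,
    "name": "Quick ChatGPT Access",
    "permissions": ["cookies", "storage"],
    "host_permissions": ["<all_urls>"],
}
print(audit_manifest(example))  # ['api:cookies', 'host:<all_urls>']
```

A flagged manifest isn’t proof of malice; plenty of honest extensions ask for `cookies` or `<all_urls>`. The point is to know which helpers could act as you inside the sessions your browser already holds.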

If you’re wondering whether this is “just ads,” reframe it. A hijacked business account is a broadcast tower you don’t control. It can run paid campaigns that impersonate you to your own customers, spread malware at scale, and trigger platform policy violations that throttle or ban legitimate reach. Axios covered one operation where a fake ChatGPT extension exposed credentials for “at least 6,000 corporate accounts and 7,000 VPN accounts,” turning an app-store curiosity into a corporate credential breach (Axios, 2023). Advertising budgets are cash; customer trust is equity. Both are on the table when the lure is an “AI helper.”

The email channel mirrors the same theatre. In late 2024, Barracuda tracked a large-scale OpenAI-impersonation campaign pushing victims to “update payment information” for supposed ChatGPT subscriptions: a simple brand hijack that rode invoice anxiety and the ubiquity of SaaS billing (Barracuda, 2024). It’s not sophisticated. It works because your finance stack is noisy. In the noise, authority wins. If the logo looks right and the cadence feels familiar, the link gets a click. In the click, credentials move.
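
One cheap tripwire for this kind of brand hijack is a display-name check: flag mail whose display name invokes the brand while the actual address sits outside the brand’s domains. Here is a minimal Python sketch using the standard library’s `email.utils.parseaddr`. The `BRAND_DOMAINS` mapping is an assumption you would fill from the vendor’s own documentation, and the check is no substitute for SPF, DKIM, and DMARC enforcement at your mail gateway.

```python
from email.utils import parseaddr

# Assumption: brand keyword -> domains that brand actually sends from.
# Fill this from the vendor's own documentation; these values are illustrative.
BRAND_DOMAINS = {"openai": {"openai.com"}}


def sender_mismatch(from_header: str) -> bool:
    """True when the display name invokes a brand but the address is outside its domains."""
    display, address = parseaddr(from_header)
    # Normalize "Open AI" / "OPENAI" to the same keyword form.
    name = display.lower().replace(" ", "")
    domain = address.rpartition("@")[2].lower()
    for brand, domains in BRAND_DOMAINS.items():
        if brand in name:
            # Accept the brand's domain itself or any subdomain (e.g. mail.openai.com).
            trusted = any(domain == d or domain.endswith("." + d) for d in domains)
            if not trusted:
                return True
    return False


print(sender_mismatch('"OpenAI Billing" <billing@openai-support.example>'))  # True
print(sender_mismatch("OpenAI <noreply@mail.openai.com>"))  # False
```

This catches the lazy version of the con: “OpenAI Billing” sending from an unrelated domain. It will not catch a look-alike address behind a neutral display name, which is why it belongs alongside gateway authentication, not instead of it.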

“Why would Apple and Google let this through?” Because much of this isn’t malware in the narrow sense. WIRED called it out: many of the apps avoid “technically invasive” behavior that would trigger automated rejection, and when stores do take them down, the operators relist variants with minor changes (Newman, 2023). On the browser side, the “quick access” extensions often do provide quick access. That’s the trick. You’re using real features while the infostealer works its way through your accounts. On social platforms, Meta’s own reporting underscored the whack-a-mole dynamic: more than 1,000 malicious URLs blocked, and malware families shifting lures from ChatGPT to Bard and other trendy covers (Rosen, 2023). The audit trail is clear; the surface keeps renewing.

Developers aren’t exempt, either. The fraudsters speak your language. The PyPI case is instructive: packages named like productivity shortcuts, a README that mentions API convenience, legitimate outputs when you test locally, and a stealer in the payload (Kaspersky GReAT, 2024). ESET’s threat notes add a quiet detail: some malicious extensions import a remote tracker script that phones home with a user ID and extension details; if the server responds with a URL, the extension opens it in a new tab. The “feature” is now a command-and-control path, disguised as UX (ESET Research, 2023). It’s not a zero-day. It’s a design choice bent sideways.
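
On the developer side, one habit that blunts the “works locally, steals quietly” pattern is to inspect a package’s source before you install or import it. Here is a minimal Python sketch. It parses files with the standard `ast` module, which never executes the code, and flags calls that often appear when a package decodes and runs hidden code or fetches a second stage. The `SUSPICIOUS_CALLS` set is a hypothetical heuristic, not Kaspersky’s detection logic, and legitimate libraries use every one of these calls.

```python
import ast
from typing import List, Optional, Tuple

# Hypothetical heuristic list: calls often seen when a package decodes and runs
# hidden code or pulls down a second stage. Honest libraries use them too.
SUSPICIOUS_CALLS = {
    "exec", "eval", "compile", "__import__",  # run code built at runtime
    "b64decode",                              # unpack an encoded blob
    "urlopen", "urlretrieve",                 # fetch something from the network
    "Popen", "system",                        # launch another program
}


def _call_name(node: ast.Call) -> Optional[str]:
    """Name of the called function: exec(...) -> 'exec', base64.b64decode(...) -> 'b64decode'."""
    if isinstance(node.func, ast.Name):
        return node.func.id
    if isinstance(node.func, ast.Attribute):
        return node.func.attr
    return None


def scan_source(source: str) -> List[Tuple[int, str]]:
    """Parse (never execute) Python source and return (line, call) pairs worth a manual look."""
    hits = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Call):
            name = _call_name(node)
            if name in SUSPICIOUS_CALLS:
                hits.append((node.lineno, name))
    return sorted(hits)


sample = "import base64\npayload = base64.b64decode('eA==')\nexec(payload)\n"
print(scan_source(sample))  # [(2, 'b64decode'), (3, 'exec')]
```

Point it at the `.py` files from an unpacked sdist or wheel rather than an installed copy, since installing can already run a package’s code. A hit means “read this file by hand,” not “malware.”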

Meanwhile, security news sites and trade press amplify, warn, and sometimes chase the story after the damage is done. The Hacker News covered the Facebook-hijacking extension in March 2023, the same month Guardio reported thousands of daily installs (Lakshmanan, 2023; Tal, 2023). Check Point reported that “1 out of 25” new ChatGPT-related domains it tracked early on were malicious or potentially malicious, an early read on the problem that ESET’s later telemetry would echo at scale (Check Point Research, 2023; ESET Research, 2023). If you lead a small company, you don’t need all the names. You need the shape. And the shape is simple: a real-looking AI wrapper that gives you something useful while taking something essential.

The governance failure mode here isn’t only technical. It’s cultural. The speed culture we celebrate creates perfect cover for look-alike helpers that promise one-click gains. The criminals don’t need to break your MFA if they can become you inside the browser you already authenticated. They don’t need to write a kernel rootkit if they can sell you a free trial with a $10/week drain. They don’t need to crack your perimeter if they can bill your API key until the credit card screams. The exploit is the shortcut. The shortcut is you.

Maybe that’s the hardest part to admit. That the attack surface expanded not just because AI got good, but because the hunger for it made every surface feel legitimate. The app with the right icon. The extension with the right name. The “OpenAI payment” email that lands on the 28th. We recognize ourselves in the mirror, and then the mirror moves.

This isn’t a sermon about slowing down. It’s a transcript of an era. Tools arrive; actors follow; platforms catch up; the surface shifts; actors return. You didn’t do anything wrong by wanting a faster stack or a helpful bot. But desire is a channel. In the channel, there are hands.

And they are very good at looking official.

References

Axios. (2023, April 14). Thousands compromised in ChatGPT-themed scheme. https://www.axios.com/2023/04/14/chatgpt-scheme-ai-cybersecurity

Barracuda Networks. (2024, October 31). Cybercriminals impersonate OpenAI in large-scale phishing attack. https://blog.barracuda.com/2024/10/31/impersonate-openai-steal-data

Check Point Research. (2023, May 2). Fake websites impersonating association to ChatGPT pose high risk. https://blog.checkpoint.com/research/fake-websites-impersonating-association-to-chatgpt-poses-high-risk-warns-check-point-research/

ESET. (2024, July 29). Beware of fake AI tools masking a very real malware threat. WeLiveSecurity. https://www.welivesecurity.com/en/cybersecurity/beware-fake-ai-tools-masking-very-real-malware-threat/

ESET Research. (2023). ESET Threat Report H2 2023 (pp. 12–14). https://web-assets.esetstatic.com/wls/en/papers/threat-reports/eset-threat-report-h22023.pdf

Kaspersky Global Research & Analysis Team. (2024, November 20). Kaspersky uncovers year-long PyPI supply chain attack using AI chatbot tools as lure [Press release]. https://www.kaspersky.com/about/press-releases/kaspersky-uncovers-year-long-pypi-supply-chain-attack-using-ai-chatbot-tools-as-lure

Lakshmanan, R. (2023, March 13). Fake ChatGPT Chrome extension hijacking Facebook accounts for malicious advertising. The Hacker News. https://thehackernews.com/2023/03/fake-chatgpt-chrome-extension-hijacking.html

Newman, L. H. (2023, May 17). ChatGPT scams are infiltrating Apple’s App Store and Google Play. WIRED. https://www.wired.com/story/chatgpt-scams-apple-app-store-google-play/

Rosen, G. (2023, May 3). Meta’s Q1 2023 security reports: Protecting people and businesses. Meta. https://about.fb.com/news/2023/05/metas-q1-2023-security-reports/

Tal, N. (2023, March 8). “FakeGPT”: New variant of fake-ChatGPT Chrome extension stealing Facebook ad accounts with thousands of daily installs. Guardio Labs. https://guard.io/labs/fakegpt-new-variant-of-fake-chatgpt-chrome-extension-stealing-facebook-ad-accounts-with