The code compiles. Tests pass. A demo dazzles. Somewhere in the happy path, a line that “looked right” lets user input flow unescaped into a log, or an API call disables certificate checks because the model assumed a permissive default. No alarms. No friction. Just momentum, until the day the snippet becomes an entry point.

This is what AI-assisted insecure code looks like up close: plausible fragments, stitched together fast, that work and are wrong. The risk is not theatrical. It’s cumulative. And it’s spreading through startups and small teams moving quickly with AI copilots, code chat, and “vibe coding,” shorthand for trusting a fluent suggestion because it feels right.

A decade of software security patterns didn’t vanish. But they’re being outpaced by systems that can generate plausible, production-shaped code faster than most teams can review it. Multiple independent studies, spanning user behavior, generated samples, and real repositories, converge on the same shape: AI makes it easier to write code, and easier to write insecure code that developers wrongly judge to be safe (Perry et al., 2022; Pearce et al., 2021).

“Participants who had access to an AI assistant wrote significantly less secure code than those without,” a Stanford-led team reported, adding that assisted developers were also more confident in their answers (Perry et al., 2022). In a controlled evaluation of GitHub Copilot’s outputs across 89 security-relevant scenarios, researchers found that ~40% of generated programs were vulnerable (Pearce et al., 2021). Those are lab conditions, yes, but they mirror what’s now visible in the wild.

By mid-2025, a broad, model-agnostic test from Veracode turned the finding into a number no engineering leader can ignore: “In 45 percent of all test cases, LLMs introduced vulnerabilities classified within the OWASP Top 10” (Veracode, 2025). The language breakdown showed how widespread the failures were: Java failed security checks in roughly seven out of ten tasks, while Python, C#, and JavaScript failed in the high-30s to mid-40s percent range (Veracode, 2025). The culprits weren’t exotic zero-days. They were familiar web flaws, with XSS and log injection among the most common, delivered with composure and speed (Veracode, 2025).
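Log injection is a good example of how small the missing mitigation usually is. The sketch below is a minimal Python illustration, assuming a service that logs through the standard `logging` module; `sanitize_for_log` is a hypothetical helper, and escaping CR/LF is one common neutralization for CWE-117, not the only one (structured JSON logging achieves the same goal).

```python
import logging


def sanitize_for_log(value: str) -> str:
    """Neutralize characters that let user input forge extra log lines (CWE-117).

    Hypothetical helper: escapes CR and LF so one request can only produce
    one log line, and renders other control characters visibly.
    """
    out = []
    for ch in value:
        if ch == "\n":
            out.append("\\n")
        elif ch == "\r":
            out.append("\\r")
        elif ord(ch) < 0x20 or ch == "\x7f":
            out.append(f"\\x{ord(ch):02x}")
        else:
            out.append(ch)
    return "".join(out)


logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("auth")

# A username crafted to forge a second, fake log entry.
username = "alice\nINFO login succeeded for admin"

# Unsafe: the embedded newline splits this into two lines, one of them forged.
# log.info("login failed for %s", username)

# Safer: the payload stays on a single line, visibly escaped.
log.info("login failed for %s", sanitize_for_log(username))
```

The choice to escape rather than strip is deliberate: an investigator reading the log can still see that someone tried to smuggle a newline in.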

If you’re picturing sloppy pseudocode, adjust the mental image. The issue is not that models generate nonsense; it’s that they generate credible code that defaults to insecure choices. That’s why developers keep it. GitHub’s own research with Accenture shows teams routinely accept and merge AI suggestions; in their enterprise study, developers accepted around 30% of Copilot’s suggestions and retained 88% of the characters generated (GitHub, 2024). Incentives are aligned with shipping. The snippet that compiles is the snippet that lives.

Under the surface, three dynamics keep repeating.

First, surface fluency beats depth. Models excel at idiomatic patterns but struggle with data-flow reasoning, which is precisely where security lives. That’s why you see “works but unsafe” defaults: string concatenation in SQL, naive regexes, permissive CORS, missing CSRF tokens, insecure cookie flags, poor header hygiene. Veracode’s dataset is blunt on this point: models routinely missed defense-in-depth basics like XSS and log-injection mitigations, even when syntax and functionality were fine (Veracode, 2025).
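String concatenation in SQL is the classic “works but unsafe” case. Here is a minimal, self-contained Python sketch using the standard-library `sqlite3` module and an in-memory database; the table, rows, and function names are illustrative assumptions. Both functions return the right row for a normal name, which is exactly why the unsafe one survives review.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, email TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "alice@example.com"), ("bob", "bob@example.com")],
)


def find_user_unsafe(name: str):
    # "Works but unsafe": user input is spliced into the SQL text itself.
    query = "SELECT name, email FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(name: str):
    # Parameterized: the driver passes `name` as data, never as SQL syntax.
    return conn.execute(
        "SELECT name, email FROM users WHERE name = ?", (name,)
    ).fetchall()


payload = "x' OR '1'='1"
print(len(find_user_unsafe(payload)))  # 2: the injected OR clause matches every row
print(len(find_user_safe(payload)))    # 0: the payload is treated as a literal name
```

The same principle (keep data out of the query text) applies to any database driver that supports placeholders; only the placeholder syntax varies.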

Second, overconfidence becomes a bug multiplier. In the Stanford study, assisted participants did worse, but thought they’d done better (Perry et al., 2022). That gap matters. It’s the difference between adding scrutiny and waving code through. It turns code review into a rubber stamp for “looks production-ready,” because it reads like your team’s house style.

Third, hallucinated dependencies create supply-chain drift. Code models don’t just complete functions; they “remember” package names that never existed and suggest them with authority. That’s not a curiosity. It’s a threat. Recent analyses of package hallucination show models inventing imports and libraries across languages with worrying persistence (Spracklen et al., 2024/2025). The scenario writes itself: an attacker registers a name that models keep hallucinating; a rushed developer runs `pip install` or `npm install` on the plausible-sounding package; your build chain now trusts malware because an LLM hallucinated it (Spracklen et al., 2024/2025).
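One cheap guardrail is to refuse any dependency nobody on the team has reviewed. The sketch below assumes a hypothetical in-repo allowlist (`APPROVED`) and checks `requirements.txt`-style lines against it; the parsing is deliberately simplified and is not a full PEP 508 parser. In practice teams often pair a check like this with a private package mirror or hash-pinned installs (`pip install --require-hashes`).

```python
import re

# Hypothetical allowlist of packages the team has actually vetted.
APPROVED = {"requests", "flask", "sqlalchemy"}


def normalize(name: str) -> str:
    # PEP 503 normalization: case-insensitive, runs of -, _ and . are equivalent.
    return re.sub(r"[-_.]+", "-", name).lower()


def unapproved_requirements(lines: list[str]) -> list[str]:
    """Return requirement names that are not on the allowlist."""
    approved = {normalize(n) for n in APPROVED}
    flagged = []
    for raw in lines:
        line = raw.split("#", 1)[0].strip()   # drop comments and whitespace
        if not line or line.startswith("-"):  # skip blanks and pip options like -r
            continue
        # Simplified: the project name is the leading run of [A-Za-z0-9._-].
        match = re.match(r"[A-Za-z0-9][A-Za-z0-9._-]*", line)
        if match and normalize(match.group(0)) not in approved:
            flagged.append(match.group(0))
    return flagged


requirements = [
    "requests==2.32.3",
    "Flask>=3.0  # web framework",
    "flask-jwt-securely==0.1.0",  # hypothetical, plausible-sounding, never reviewed
]
print(unapproved_requirements(requirements))  # ['flask-jwt-securely']
```

The package name `flask-jwt-securely` is invented for this example to stand in for a hallucinated dependency. Failing the build on any unapproved name turns “someone should double-check this import” into a step nobody can skip.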

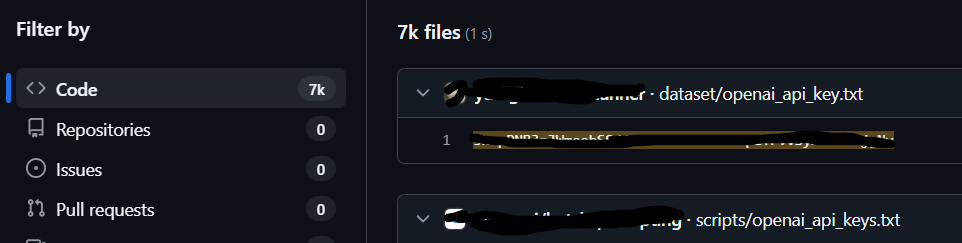

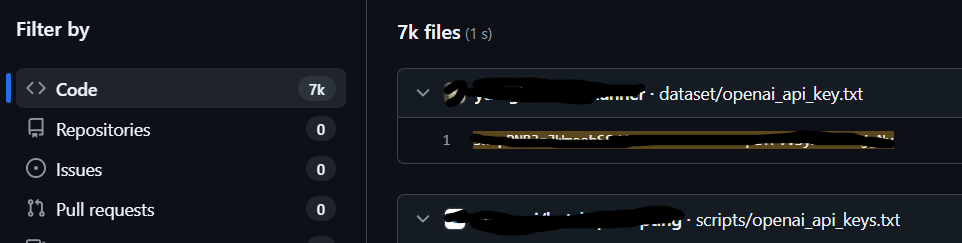

Even when the snippet is real, the training substrate can carry risk. In early 2025, researchers scanning public web corpora tied to LLM training uncovered roughly 12,000 live secrets (API keys and passwords) in the data itself (Truffle Security, 2025). That’s not proof that any specific model memorized those credentials and will regurgitate them, but it is a bright flare: insecure public code and leaked configs are part of what models ingest, so insecure patterns are part of what they can reproduce (Truffle Security, 2025).
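The practical corollary is to keep secrets out of your own repositories, including any generated snippet that inlines a credential. The sketch below is a minimal, pattern-based check in Python; the three detectors are illustrative assumptions, and dedicated scanners such as Truffle Security’s TruffleHog cover far more formats and can check whether a candidate secret is live.

```python
import re

# Illustrative detectors only; real scanners ship hundreds of them.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "github_classic_pat": re.compile(r"\bghp_[A-Za-z0-9]{36}\b"),
    "generic_assignment": re.compile(
        r"(?i)\b(?:api_key|secret|password|token)\s*=\s*['\"][^'\"]{8,}['\"]"
    ),
}


def find_secrets(text: str) -> list[tuple[int, str]]:
    """Return (line_number, detector_name) for each line that looks like a secret."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                hits.append((lineno, name))
    return hits


snippet = '''import os
AWS_KEY = "AKIAIOSFODNN7EXAMPLE"  # placeholder key ID from AWS documentation
password = "hunter2hunter2"
token = os.environ["API_TOKEN"]  # read at runtime, so not flagged
'''
print(find_secrets(snippet))
```

Run as a pre-commit hook or CI step, a check like this catches the most obvious inlined credentials before they reach a public repository, where they can end up in the next scraped corpus.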

If you want a concrete sense of the “works but unsafe” split, read the names of the weaknesses that empirical audits of Copilot code in real GitHub projects keep turning up: insufficient randomness, improper control of code generation, cross-site scripting. One such audit found weaknesses spanning 43 CWE categories in just two languages, with roughly 25–30% of snippets affected (Kashiparekh et al., 2025). The failures are pedestrian and damaging, not arcane (Kashiparekh et al., 2025).
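Insufficient randomness (CWE-330) often looks like this in generated code: a token built with Python’s `random` module, a Mersenne Twister PRNG that the Python documentation says should not be used for security purposes, because its output becomes predictable once enough of it is observed. A minimal sketch of the weak pattern next to the standard-library `secrets` alternative; the function names are hypothetical.

```python
import random
import secrets


def reset_token_weak() -> str:
    # CWE-330 pattern: `random` is a non-cryptographic PRNG, fine for
    # simulations but not for minting credentials or reset links.
    alphabet = "abcdefghijklmnopqrstuvwxyz0123456789"
    return "".join(random.choice(alphabet) for _ in range(16))


def reset_token_strong() -> str:
    # `secrets` draws from the OS CSPRNG; 32 random bytes -> 43 URL-safe chars.
    return secrets.token_urlsafe(32)


def tokens_match(supplied: str, expected: str) -> bool:
    # Constant-time comparison avoids leaking how many leading chars matched.
    return secrets.compare_digest(supplied, expected)


token = reset_token_strong()
print(len(token), tokens_match(token, token))
```

Both token functions “work” in a demo; only one of them resists an attacker who can request a few thousand tokens and model the generator.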

Zoom out and the social mechanics are just as important as the technical ones. AI lowers the cost of boilerplate. It also lowers the cost of copy-pastable security debt. Studies that celebrate a 20–30% lift in coding throughput aren’t wrong about the speed; they’re silent about the composition of that speed (GitHub, 2024; Microsoft, 2025). If the marginal line of code is more likely to be a default config, an auth shim, or an input handler, then the marginal risk accumulates where attackers live. Volume compounds. Review budgets do not.

This isn’t confined to any one IDE or vendor. Veracode’s cross-model sweep found no steady improvement among newer or larger models on security tasks. Bigger didn’t equal safer (Veracode, 2025). And while prompt phrasing sometimes nudged models toward better patterns, that’s not a security property; it’s a style guide with wishful thinking. The OWASP Top 10 for LLM Applications spells this out plainly in a different context: when downstream systems trust model output without validation, you get insecure output handling, a category that reads like a mirror held up to the way teams drop generated snippets into live systems (OWASP, 2024/2025).
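Insecure output handling is easiest to see when model output is rendered straight into a page. A minimal Python sketch, assuming a hypothetical chat UI that wraps each reply in a `<div>`: the safe variant treats the model’s text like any other untrusted input and escapes it with the standard-library `html.escape` before embedding it.

```python
import html


def render_reply_unsafe(model_output: str) -> str:
    # Trusts the model: any markup in its output becomes live HTML (XSS).
    return f"<div class='reply'>{model_output}</div>"


def render_reply_safe(model_output: str) -> str:
    # Escapes &, <, > and both quote characters for the HTML body context.
    return f"<div class='reply'>{html.escape(model_output)}</div>"


# Text a model might echo back from a poisoned document or a crafted prompt.
output = 'Done! <img src=x onerror="fetch(\'/steal?c=\'+document.cookie)">'
print(render_reply_safe(output))
```

`html.escape` is only correct for HTML element content and quoted attribute values; URLs, inline scripts, and CSS need their own encoding, which is why a templating engine with autoescaping (for example, Jinja2 with `autoescape` enabled) is usually the sturdier default.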

It would be comforting if this were just a “first-gen model” problem. It isn’t. In fact, a throughline across multiple 2024–2025 reports is the stability of failure modes: models remain remarkably good at sounding secure while selecting implementations that aren’t. Press summaries of the 2025 Veracode study landed the punchline: nearly half of AI-generated code carried “glaring security flaws,” often around XSS and logging (ITPro, 2025; TechRadar Pro, 2025). Those aren’t rare edge cases. They’re everyday web bugs.

If you lead a small team, the uncomfortable truth is that AI has normalized high-confidence wrongness in places where correctness used to be policed by boredom. Nobody wanted to hand-write OAuth scaffolding. Now nobody has to. But the bargain we’ve made is that a system optimized to please you linguistically will also please you aesthetically, and aesthetics are a poor proxy for security.

Listen to the original Copilot audit again: “Of [1,689] programs, we found approximately 40% to be vulnerable” (Pearce et al., 2021). Then overlay the behavioral study: “participants with access to an AI assistant were more likely to believe they wrote secure code” (Perry et al., 2022). Then accept the 2025 baseline: 45% insecure in a broad, modern sweep (Veracode, 2025). The line threads through three methods and four years. It doesn’t get weaker.

There’s one more twist: invented packages don’t stay imaginary. The research community is now documenting how often models suggest dependencies that don’t exist and how consistently they repeat the fiction, which is exactly what makes those names worth registering for an attacker (Spracklen et al., 2024/2025). That’s an attack surface, not a typo. It collapses the time between suggestion and supply-chain compromise. And it’s especially dangerous in the SMB reality where one senior engineer plays architect, reviewer, and firefighter across a product that’s finally growing.

None of this is an indictment of using AI at all. It’s a description of the risk geometry when you combine speed, fluency, and human optimism. You will ship more code with AI. Some of that code will be wrong in the exact ways attackers like. The change is not that developers suddenly forgot how to validate input or set headers. The change is that they see fewer reasons to slow down when the code reads like something they would have written on their best day.

If you’ve felt that subtle shift (reviews getting quicker because the diff “looks clean,” teammates pasting in coherent “fixes,” logs filling with template-generated structure), you’re not imagining it. That is the texture of AI-assisted insecure code propagating. And the right mental model is simple: the threat is not spectacular. It is ordinary. Ordinary code. Ordinary defaults. Ordinary assumptions, replicated at machine speed.

The breach rarely advertises itself in the snippet. It shows up later, in a timeline where everything “made sense” until it didn’t.

References

GitHub. (2024, May 13). Research: Quantifying GitHub Copilot’s impact in the enterprise (with Accenture). The GitHub Blog. https://github.blog/news-insights/research/research-quantifying-github-copilots-impact-in-the-enterprise-with-accenture/

ITPro. (2025, July 30). Researchers tested over 100 leading AI models on coding tasks—nearly half produced glaring security flaws. ITPro. https://www.itpro.com/technology/artificial-intelligence/researchers-tested-over-100-leading-ai-models-on-coding-tasks-nearly-half-produced-glaring-security-flaws

Kashiparekh, K., et al. (2025). Security weaknesses of Copilot-generated code in GitHub projects: An empirical study. arXiv. https://arxiv.org/html/2310.02059v4

Microsoft. (2025, July 24). AI-powered success—with more than 1000 stories of customer transformation and innovation. Microsoft Cloud Blog. https://www.microsoft.com/en-us/microsoft-cloud/blog/2025/07/24/ai-powered-success-with-1000-stories-of-customer-transformation-and-innovation/

OWASP Foundation. (2025). OWASP Top 10 for Large Language Model Applications (v1.1). https://owasp.org/www-project-top-10-for-large-language-model-applications/

Pearce, H., Ahmad, B., Tan, B., Dolan-Gavitt, B., & Karri, R. (2021). Asleep at the keyboard? Assessing the security of GitHub Copilot’s code contributions. arXiv. https://arxiv.org/abs/2108.09293

Perry, N., Srivastava, M., Kumar, D., & Boneh, D. (2022). Do users write more insecure code with AI assistants? arXiv. https://arxiv.org/abs/2211.03622

Spracklen, J., et al. (2024). We have a package for you! A comprehensive analysis of package hallucinations in code LLMs. ;login: The USENIX Magazine. https://www.usenix.org/publications/loginonline/we-have-package-you-comprehensive-analysis-package-hallucinations-code

Spracklen, J., et al. (2024). We have a package for you! A comprehensive analysis of package hallucinations in code LLMs. arXiv. https://arxiv.org/abs/2406.10279

TechRadar Pro. (2025, July). Nearly half of all code generated by AI found to contain security flaws—Even big LLMs affected. TechRadar Pro. https://www.techradar.com/pro/nearly-half-of-all-code-generated-by-ai-found-to-contain-security-flaws-even-big-llms-affected

Truffle Security. (2025, February 27). Research finds 12,000 “live” API keys and passwords in LLM training data. Truffle Security Blog. https://trufflesecurity.com/blog/research-finds-12-000-live-api-keys-and-passwords-in-deepseek-s-training-data

Veracode. (2025, July 30). Continuous protection for the cloud era: Veracode spotlights latest innovations for advanced software security [Press release]. https://www.veracode.com/press-release/continuous-protection-for-the-cloud-era-veracode-spotlights-latest-innovations-for-advanced-software-security/

Veracode. (2025, July 30). 2025 GenAI Code Security Report. Veracode Blog. https://www.veracode.com/blog/genai-code-security-report/

Veracode. (2025). 2025 GenAI Code Security Report. Veracode. https://www.veracode.com/resources/analyst-reports/2025-genai-code-security-report/