It starts in the quiet parts of your day. A message that lands clean. It knows your time zone, your dog’s name, the way you write when you’re tired. The profile photo feels both specific and generic, like an ad that almost works. You reply. They reply faster. The conversation doesn’t stall. It never stalls. Not at noon, not at 3:12 a.m. The attention is industrial.

What you’re meeting is not a person. It’s a workflow.

The factory feeling

Researchers have a name for it that is almost too neat: Intimacy Manipulated Fraud Industrialization. In 2023, Wang and Topalli traced how ostensibly legitimate “chat moderator” jobs are organized into shift-based, per-message operations where workers are “paid on a per-text basis to engage in intimate chatting,” and where background files on each target are assembled in real time as multiple workers rotate through a single persona (Wang & Topalli, 2023). One line from their study reads like a blueprint: these scams are “industrialized through enterprise business practices, software platforms, and customer service processes” (Wang & Topalli, 2023).

Industrialization changes the texture. A single scammer can be charming; a factory can be consistent. Scripts don’t forget your sister’s surgery. Handoffs don’t lose tone. The affect is canned but tuned. Replies track your cadence and mirror your slang. If you pause, a “concern check” arrives. If you test, a selfie appears. If you escalate, a voice note answers. Intimacy isn’t improvised anymore. It’s scheduled.

The logistics are unromantic. In one ProPublica investigation into “pig-butchering” operations, an interviewee described teams working from compounds, each person issued multiple phones, a leader pushing lists of numbers, and detailed training manuals that instruct workers to find “pain points” and then map “customer” wealth and vulnerability (Podkul, 2022). The cadence is an assembly line’s. Wrong-number openers. Quick warmth. Frequent transfers to more skilled closers. “Some have gone so far as to draft detailed, psychologically astute training materials,” ProPublica reported, after reviewing more than 200 internal documents (Podkul, 2022).

This isn’t only about curated attention. It’s also about supply chains. Photos are bought in bulk. Short video clips are sourced or fabricated. A single persona can be staffed by a small squad: one worker for text, one for voice notes in a specific accent, one to appear on a short, low-bandwidth call. The effect lands as spontaneous care. The mechanics look like a call center that learned to flirt.

Scale, then spillover

When you stack people and process, you get scale. When you add generative AI, you get volume with personalization. “Fraudsters use AI catfishing to add speed, scale and sophistication to their romance fraud,” said Dr. Jessica Barker in The Independent in April 2024. “Scammers can manipulate many more targets, much quicker and easier, with the aid of AI,” she added. She noted how large language models (LLMs) can generate multiple profiles and carry parallel conversations, while AI video tools erode old verification tricks (Coffey, 2024).

That speed doesn’t show up as grammatical perfection. It shows up as availability. The response time that feels like devotion. The thread that never drops. And because the factories don’t care which channel you prefer, you’ll see the same voice across dating apps, Instagram DMs, WhatsApp, and even LinkedIn. Channel choreography is part of the job.

The money tracks the messaging. The Federal Trade Commission’s own numbers say the quiet part out loud. In 2022, nearly 70,000 people reported romance scams, with reported losses of $1.3 billion and a median loss of $4,400 (Federal Trade Commission, 2023). In 2023, romance-category reports ticked down to 64,003 but still drove $1.14 billion in reported losses, the highest median loss per person among imposter scam types (Federal Trade Commission, 2024). These are only reported figures; shame discounts the ledger.

Industrial operations exploit that underreporting. The more the work resembles customer success, the less victims believe they’ve been attacked by a system. That ambiguity buys time. And time, in this business, is deposits.

What a chat factory does all day

Picture the room. Fluorescent light. A mess of cables. Supervisors walk the aisle. Each worker manages a dozen live chats, half-scripted and half-improvised, all tracked by software that scores engagement. New workers learn the taxonomy of affection (love-bombing, scarcity, intermittent reinforcement) and how to avoid the “tells” that triggered earlier blocks. Background notes compile as the chat flows: favorite coffee, griefs, a child’s middle name. The persona gets heavier. The mask fits better.

Every task has a metric. Minutes to first photo. Depth of self-disclosure by day three. Conversion to a “we” vocabulary. Time on call. Soft asks that test payment rails. Hard asks that move money. Where a hobbyist scammer might go for a single big strike, industrial operators segment outcomes. Some targets are data mines. Some become money mules. Some are high-value “whales” for a long, suffocating run. The factory tunes itself to whatever the target will bear.

To keep that throughput high, the industry builds around it: marketplaces selling photo bundles and “handsome guy sets,” recruitment channels trading workers, and translation tools that erase language friction (Podkul, 2022). In this world, intimacy is a SKU.

The forced-labor shadow

One reason the tone can feel robotic even when the text is smooth: sometimes it is. Not just AI robotic: human robotic. United Nations bodies and investigative reporters have documented scam compounds in Southeast Asia where trafficked workers are coerced into 12-hour chat shifts under threats of violence, blending human trafficking with cyberfraud at scale (Office of the High Commissioner for Human Rights, 2022; Reuters, 2025). The United States Institute of Peace, summarizing a multi-country study group, argues these intertwined networks have evolved into “the most powerful criminal network of the modern era,” with scam compounds centered in Myanmar, Cambodia, and Laos and losses mounting rapidly in North America (United States Institute of Peace, 2024).

This matters for two reasons. First, forced criminality changes the ethics: in many factories, the “scammer” on your screen is also a victim. Second, coercion feeds consistency. People under duress hit quotas. People under quotas send messages on time.

The money layer is also industrial

At the retail level, the hook is romantic. At the wholesale level, the system is financial. ProPublica’s June 2025 reporting describes a $44-billion-a-year pig-butchering economy, with Asian syndicates renting U.S. bank accounts via Telegram and laundering dollars into crypto and back again through a churn of shell companies and “motorcades” of accounts (Podkul, 2025). A U.S. study group cited in that reporting calls out how these syndicates exploit weak governance, move quickly across borders, and lean on legitimate-looking platforms to add a sheen of normalcy (United States Institute of Peace, 2024).

The operational consequence is stark. A romance chat that begins with a playlist and a pet name can end with a wire transfer to a real branch in your city, a brokerage login that looks familiar, and a dashboard that seems to show gains. The interface is clean enough to quiet doubt. The back end is a conveyor belt.

AI doesn’t replace the factory. It oils it.

Two truths can be held at once. First, AI makes the scammer’s copy better, faster, and more “you.” Second, AI does not, on its own, create the industrial outcome. Factories do. Barker’s warning (“speed, scale and sophistication”) is the cleanest bridge between them, because it frames AI not as a villain but as a force multiplier that binds to existing processes (Coffey, 2024). When you can spin 100 plausible bios in an afternoon, blend a new photo set into a consistent face, and keep five language threads coherent, you stop worrying about the weak link. You stop losing productivity to boredom.

Emerging research tracks how these dynamics widen the pool of victims. A 2025 study of pig-butchering victims presented at USENIX SOUPS found losses ranging from hundreds to hundreds of thousands of dollars, with life savings drained and long recovery tails (Oak, 2025). When the input pipeline is industrial and the personalization is synthetic, the outcome spreads: more people, smaller average deposits, more deposits per person. That’s how volume looks when it’s optimized.

And the geography is shifting. UNODC findings, as reported by Reuters, suggest the compound model, born in parts of Southeast Asia, is exporting itself: replicating in new jurisdictions, partnering with other criminal markets, and recruiting across continents (Reuters, 2025). Industrial techniques travel. So does loneliness.

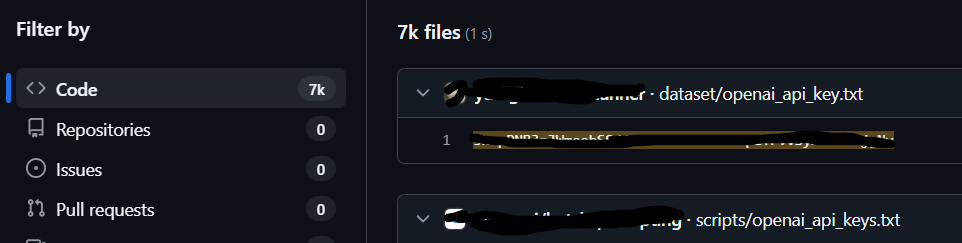

Companies are searchable

Your company blog, your LinkedIn, your conference photos: these reduce the cost of a convincing courtship. The factory loves low acquisition cost. Your public calendar provides windows. Your investor update provides hooks. Your hiring post provides leverage. Chat factories don’t need to find your soul. They need to find your surface area.

The operational choreography is also familiar to you. You already know how a CS team moves from playbook to escalations, how QA files tickets, how sales runs parallel sequences on the same account. When you read about sext-chat moderators and persona handoffs, it just sounds like a perverse CRM. That’s the point. If fraud looks like business, it gets to live where business lives.

The cruelty is ordinary. Not cinematic. A “good morning” at an odd hour that always reads warm. A delayed first ask that respects your workday. A “we” that appears not as pressure but as a comfort. The feeling that someone is tracking your mood. They are. It’s in the notes column.

The industry would prefer we treat this as a parade of sad anecdotes. It isn’t. It’s a system with upstream recruiting, midstream scripting, downstream money movement, and a synthetics layer that lowers cost per outcome. Even its tragedies are patterned.

The replies keep coming at 3:12 a.m. They always will, while the lights stay on.

References

Coffey, H. (2024, April 22). Am I falling for ChatGPT? The dark world of AI catfishing on dating apps. The Independent. https://www.independent.co.uk/life-style/ai-catfishing-dating-apps-chatgpt-b2531460.html

Federal Trade Commission. (2023, February 9). Romance scammers’ favorite lies exposed. https://www.ftc.gov/news-events/data-visualizations/data-spotlight/2023/02/romance-scammers-favorite-lies-exposed

Federal Trade Commission. (2024, February 13). “Love stinks” – when a scammer is involved. https://www.ftc.gov/business-guidance/blog/2024/02/love-stinks-when-scammer-involved

Oak, R. (2025). Unpacking the lifecycle of pig-butchering scams. USENIX SOUPS 2025. https://www.usenix.org/system/files/soups2025-oak-butchering.pdf

Office of the High Commissioner for Human Rights. (2022, August). Online scam operations and trafficking into forced criminality in Southeast Asia. https://bangkok.ohchr.org/news/2022/online-scam-operations-and-trafficking-forced-criminality-southeast-asia

Podkul, C. (2022, September 13). Human trafficking’s newest abuse: Forcing victims into cyberscamming. ProPublica. https://www.propublica.org/article/human-traffickers-force-victims-into-cyberscamming

Podkul, C. (2025, June 25). How foreign scammers use U.S. banks to fleece Americans. ProPublica. https://www.propublica.org/article/pig-butchering-scam-cybercrime-us-banks-money-laundering

Reuters. (2025, April 21). Billion-dollar cyberscam industry spreading globally, UN says. https://www.reuters.com/world/china/cancer-billion-dollar-cyberscam-industry-spreading-globally-un-2025-04-21/

United States Institute of Peace. (2024, May). Transnational crime in Southeast Asia: A growing threat to global peace and security. https://www.usip.org/sites/default/files/2024-05/ssg_transnational-crime-southeast-asia.pdf

Wang, F., & Topalli, V. (2023). The cyber-industrialization of catfishing and romance fraud (SSRN Working Paper). https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4586275