The face looks right. The blink looks right. The voice hits the exact casual warmth you’re used to hearing in an onboarding selfie-check. You approve the account and move on. The account isn’t real. The person isn’t a person. The “liveness” you thought you saw was a rendering: an overlay riding straight into your intake stack. That’s the new comfort crime: synthetic identity, scaled by generative media, slipping past the rituals that made you feel safe.

Synthetic identity isn’t a stolen you; it’s a stitched nobody. Fragments of real data (a name, a birth date, a Social Security number) get fused with fiction to mint a clean slate that scores, borrows, and disappears. The U.S. Government Accountability Office describes it plainly: the fraud “combines fictitious and real information to fabricate an identity,” and federal systems like eCBSV were built to slow it (U.S. Government Accountability Office [GAO], 2024). That’s the administrative reality. The operational reality is messier. Losses linked to synthetic identities keep climbing, and the accelerant is generative AI. “Synthetic identity fraud continues to expand,” a Boston Fed review noted in April 2025, adding that losses “crossed the $35 billion mark in 2023” (Federal Reserve Bank of Boston, 2025).

The business side feels the pressure in quieter numbers: traditional identity fraud losses in the U.S. reached nearly $23 billion in 2023 (Javelin Strategy & Research, 2024). When researchers ask fraud leaders what actually scares them, synthetic identities rank as “the number one threat” (Aité-Novarica survey, cited in Plaid, 2024). Seen from the intake queue, it’s obvious why. The old tells are fading. Documents are immaculate. Selfie checks feel animated yet human. And the cadence of the session lands just inside the threshold your system trusts.

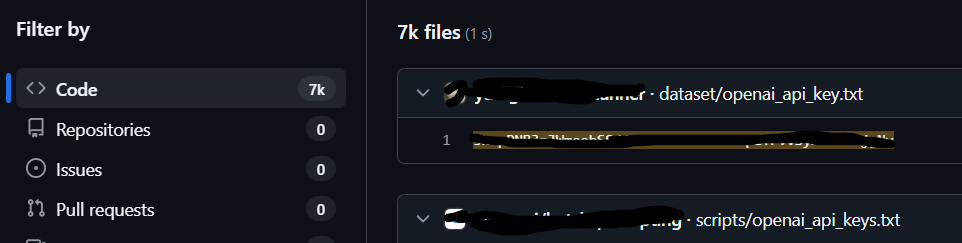

“Deepfake incidents surged tenfold from 2022 to 2023,” one industry dataset reported as the new wave crested into 2024 (Sumsub, 2023). Onfido’s annual review called out a “3,000 percent increase in deepfake attempts” and a fivefold jump in digitally forged identities in 2023 as consumer-grade gen-AI tools matured (Onfido, 2023). That’s the macro signal. The micro signal lives inside the session itself: the moment a selfie stream becomes a transport rather than a lens. iProov’s threat intelligence described the pivot in 2024–2025: native virtual-camera and injection attacks “became the primary threat vector,” spiking 2,665% in 2024; face-swap attacks rose another 300% (iProov, 2025). These are not movie-studio deepfakes; they’re cheap, real-time overlays and device-level stream hijacks engineered to beat liveness checks, often with emulators and metadata manipulation as cover.
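The figures above mix two phrasings, "tenfold" and "percent increase," which are easy to misread side by side. A minimal sketch of the conversion, assuming each vendor number is a simple percent increase over the earlier period (the cited reports do not all spell out their method); the function names are illustrative only:

```python
def growth_multiplier(percent_increase: float) -> float:
    """Convert a percent increase into an end/start ratio (300% -> 4.0x)."""
    return 1 + percent_increase / 100


def percent_increase(multiplier: float) -> float:
    """Convert an end/start ratio into a percent increase (10x -> 900%)."""
    return (multiplier - 1) * 100


# Percent figures quoted in the paragraph above, read as plain percent increases.
quoted_increases = {
    "injection attacks (iProov, 2025)": 2665,
    "face-swap attacks (iProov, 2025)": 300,
    "deepfake attempts (Onfido, 2023)": 3000,
}

for label, pct in quoted_increases.items():
    print(f"{label}: +{pct}% is about {growth_multiplier(pct):.2f}x the earlier volume")

# Sumsub's "tenfold" is a multiplier, not a percentage.
print(f"tenfold is a +{percent_increase(10):.0f}% increase")
```

Read this way, a 300% rise means four times the earlier volume, not three, and a tenfold surge is a 900% increase, not 1,000%.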

The effect is cultural as much as technical. Liveness once felt like a handshake: you believed the motion because motion was hard to fake. Not anymore. Tooling that used to require a GPU nest and scripting now ships as an app with a friendly UI. iProov’s 2024 report logged a 704% half-over-half jump in face-swap “injection attacks,” often delivered through emulators that mask the virtual camera feeding your verifier (iProov, 2024). The phrase that lands hardest in their research isn’t the percentage. It’s the posture: “what once required significant technical expertise… can now be accomplished by anyone who downloads an app.” This isn’t a hacker archetype. This is a market.

Upstream, the identity itself is assembled with a bureaucrat’s patience. Start with breach-exposed driver’s license fields, leaked SSNs, harvested addresses, school histories scraped from obits and social posts; layer in a disposable phone, a VPN trail, a warmed email, a thin credit file nurtured through small limits and on-time payments. TransUnion flagged synthetic identity as “the fastest rising type of fraud in 2024” (TransUnion, 2024). A GAO audit spelled out the government’s angle: eCBSV’s purpose is to reduce the prevalence of these composites, but even that program’s cost recovery and adoption hurdles slowed momentum (GAO, 2024). Meanwhile, the private-sector pipeline keeps filling with clean-looking ghosts.

The part that bends the mind is session realism. Synthetic identity was already winning with paper and process; now it wins with presence. Onboarding stacks that rely on “active liveness” meet a choreography engine. The camera feed doesn’t just play back a video; it is the video, injected before your SDK sees it. The emulator spoofs the handset. The metadata matches your allowlist. The face tilts on cue. “Native virtual camera attacks,” iProov wrote, “have become the primary threat vector,” and they’re now discovered inside mainstream app ecosystems, not just shady APK dumps (iProov, 2025). If your mental model for fraud was a desperate one-off, update it. This is industrial QA.
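Why "the metadata matches your allowlist" is so corrosive can be shown in a few lines. This is a minimal sketch, not any vendor's SDK: `SessionMetadata`, `ALLOWED_DEVICE_MODELS`, and `passes_allowlist` are hypothetical names, and the device strings are placeholders. The assumption being illustrated is that every field is self-reported by the client.

```python
from dataclasses import dataclass

# Hypothetical allowlist of handset models the verifier trusts.
ALLOWED_DEVICE_MODELS = {"Pixel 8", "iPhone 15"}


@dataclass(frozen=True)
class SessionMetadata:
    """Fields the client reports about itself; none are independently verified."""
    device_model: str
    camera_label: str


def passes_allowlist(meta: SessionMetadata) -> bool:
    """Naive check: trust whatever model string the client sent."""
    return meta.device_model in ALLOWED_DEVICE_MODELS


# A real handset with a physical camera.
genuine = SessionMetadata(device_model="Pixel 8", camera_label="Back Camera")

# An emulator feeding a virtual camera, configured to report the same strings.
spoofed = SessionMetadata(device_model="Pixel 8", camera_label="Back Camera")

print(passes_allowlist(genuine), passes_allowlist(spoofed))
print("indistinguishable to the check:", genuine == spoofed)
```

Both sessions pass, and the two records compare equal. A check built only on client-reported strings has nothing left to separate the handset from the emulator.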

There’s a second rhythm running in parallel: probe, learn, reuse. AU10TIX calls one tactic “Repeaters,” where crews deploy slightly varied synthetic identities (deepfake-enhanced assets used as sentinels) to test which combinations slide past KYC and liveness at which platforms. Each pass yields a new recipe. Each recipe scales (TechRadar Pro, 2025). You feel like you’re approving a person; they’re running an experiment. Traditional point-in-time checks miss the pattern because nothing looks identical twice. That’s the discipline here: treat identities as campaign artifacts, not customers. The fraud shop already does.
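One way to picture "campaign artifacts, not customers" is to link applications that are almost, but never exactly, the same. The sketch below is an illustration under stated assumptions, not AU10TIX's method: each application is reduced to a set of made-up attribute tokens, pairs are compared with Jaccard similarity, and anything above a hypothetical threshold of 0.5 is grouped with a small union-find.

```python
from itertools import combinations


def jaccard(a: frozenset, b: frozenset) -> float:
    """Share of attribute tokens two applications have in common."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def link_applications(apps: dict[str, frozenset], threshold: float = 0.5) -> list[set[str]]:
    """Group applications whose pairwise similarity meets the threshold."""
    parent = {app_id: app_id for app_id in apps}

    def find(x: str) -> str:
        # Follow parent pointers to the group root, compressing the path.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    for a, b in combinations(apps, 2):
        if jaccard(apps[a], apps[b]) >= threshold:
            parent[find(a)] = find(b)

    groups: dict[str, set[str]] = {}
    for app_id in apps:
        groups.setdefault(find(app_id), set()).add(app_id)
    # Only groups with more than one member suggest reuse.
    return [members for members in groups.values() if len(members) > 1]


# Made-up tokens: two applications vary one field, a third is unrelated.
apps = {
    "app-1": frozenset({"id_hash:ab12", "phone:555-0100", "device:emu-a", "addr:12-oak"}),
    "app-2": frozenset({"id_hash:ab12", "phone:555-0101", "device:emu-a", "addr:12-oak"}),
    "app-3": frozenset({"id_hash:ff90", "phone:555-0199", "device:ph-z", "addr:7-elm"}),
}

print(link_applications(apps))
```

Here `app-1` and `app-2` share three of five distinct tokens (similarity 0.6) and land in one group, while `app-3` stays alone. The pairwise loop is quadratic, so a real pipeline would need blocking or indexing, and the threshold would need tuning against labeled cases.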

The macro curves keep curving. Global providers reported that “the global rate of identity fraud nearly doubled from 2021 to 2023” (Sumsub, 2023). In North America, multiple analyses pegged deepfake-assisted fraud growth at four-figure percentages across 2022–2023 (e.g., the 1,740% increase cited in security surveys and press syntheses), a directional signal of availability meeting motive (Security.org, 2025; World Economic Forum, 2025). Javelin’s 2024 study framed the baseline: billions in losses, steady scam pressure, and a normalization of account-opening attacks that drag into charge-offs (Javelin Strategy & Research, 2024).

Watch how the artifacts evolve. Early tell: photorealistic but static images. Then: image-to-video conversion that yields compliant micro-movements. Then: full real-time swaps steered by a human hand but masked as a human face. By early 2025, iProov was tracking “over 120 different face swap tools,” and its SOC saw native camera–level attacks “peaking at 785 per week” (iProov, 2025). Onfido’s fraud lab announcement in late 2023 telegraphed the same anxiety from another vantage: a need to manufacture deepfakes in-house to anticipate them in the wild (Onfido, 2023).

If you’re tempted to dismiss this as a banking problem, don’t. The ripple is bigger than credit origination. Any funnel that leans on document scans and selfie checks is in scope: marketplaces with seller payouts, telehealth with remote intake, education platforms with ID-bound exams, B2B SaaS with free trials that unlock data pathways. The anti-fraud collaboration platforms looking across verticals see the same composite pattern wearing different uniforms. The Boston Fed piece quoted FiVerity’s $35B marker as a 2023 U.S. loss figure for synthetic identity; that isn’t just card portfolios: it’s new-account fraud wherever “new account” exists (Federal Reserve Bank of Boston, 2025).

The human factor isn’t comfort; it’s credulity engineered as UX. Liveness as a concept taught risk teams to trust motion. Biometrics trained execs to trust face. KYC taught ops to trust paper. When cheap media can imitate all three in a single session, the instinct to trust becomes the attack surface. “This isn’t merely a gradual evolution,” iProov said of the 2024 spike; “it represents a fundamental shift in the threat landscape” (iProov, 2025). There’s no melodrama in that line. Just the flat description of a threshold crossed.

The bureaucracy is moving, slowly. GAO pressed SSA in 2024 for “actions needed to help ensure success” of eCBSV, a system whose legislative point is cutting synthetic identities off at the source (GAO, 2024). SSA, for its part, promised changes in a March 2025 release (Social Security Administration, 2025). The distance between promise and pressure is where the industry lives now. In that distance, synthetic IDs keep maturing. “It looks live,” an iProov campaign bluntly put it. “It isn’t.” The point isn’t that detectors don’t work. The point is that deterrence has to fight a culture of plausible personhood on demand. We’re late to naming that plainly.

One more uncomfortable angle: the time profile of synthetic identity. This isn’t smash-and-grab. It’s slow credit gardening, then harvest. Accounts age. Trust accumulates. Limits rise. When the break finally comes, it registers as risk noise: charge-offs, disputes, “uncollectible.” Javelin’s figures on identity fraud losses don’t isolate synthetics well because the success condition for a good synthetic is to look like everything else (Javelin Strategy & Research, 2024). The synthetic wins by being boring. The deepfake wins by being boringly good enough. Somewhere between those two “borings” is the real story of 2024–2025: a baseline of fakery that no longer announces itself.

There’s a line from the GAO report that reads like a shrug and a warning at once: eCBSV’s purpose is to reduce synthetic identity (GAO, 2024). The purpose of liveness is the same, operationalized in pixels instead of databases. But purpose isn’t physics. Motion is now a commodity. Faces are now a commodity. The session itself is now a commodity. Synthetic identity exploits that fact with patience and tooling. Liveness bypass flatters it with realism. And the rest of us are left staring at a screen that keeps telling us a person is present. The screen might even be right. Just not in the way we think.

References

Aité-Novarica Group. (2024). [Survey findings on synthetic identity threat]. Cited in Plaid. https://plaid.com/resources/fraud/synthetic-identity-fraud/

Federal Reserve Bank of Boston. (2025, April 17). Gen AI is ramping up the threat of synthetic identity fraud. https://www.bostonfed.org/news-and-events/news/2025/04/synthetic-identity-fraud-financial-fraud-expanding-because-of-generative-artificial-intelligence.aspx

iProov. (2024). Threat Intelligence Report 2024 [PDF excerpt: face-swap and injection attack growth]. https://static.poder360.com.br/2025/02/Threat-Intelligence-report-v9.pdf

iProov. (2025, February 27). Annual Identity Verification Threat Intelligence Report [Press release]. https://www.iproov.com/press/annual-identity-verification-threat-intelligence-report

iProov. (2025). Threat Intelligence Report 2025: Remote Identity Under Attack [Landing]. https://www.iproov.com/reports/threat-intelligence-report-2025-remote-identity-attack

Javelin Strategy & Research. (2024, April 10). 2024 Identity Fraud Study: Resolving the Shattered Identity Crisis. https://javelinstrategy.com/research/2024-identity-fraud-study-resolving-shattered-identity-crisis

Onfido. (2023, November 15). Onfido releases 2024 Identity Fraud Report and launches fraud lab [Coverage]. Biometric Update. https://www.biometricupdate.com/202311/onfido-releases-2024-identity-fraud-report-and-launches-fraud-lab

Security.org. (2025). Deepfakes: Guide & statistics. https://www.security.org/resources/deepfake-statistics/

Social Security Administration. (2025, March 19). Social Security announces cost reduction and improvements to eCBSV [Press release]. https://www.ssa.gov/news/en/press/releases/2025-03-19.html

Sumsub. (2023, November 28). Global deepfake incidents surge tenfold from 2022 to 2023 [Newsroom]. https://sumsub.com/newsroom/sumsub-research-global-deepfake-incidents-surge-tenfold-from-2022-to-2023/

TechRadar Pro. (2025, May 31). Cybercriminals are deploying deepfake “Repeaters” to test defenses (AU10TIX analysis). https://www.techradar.com/pro/security/cybercriminals-are-deploying-deepfake-sentinels-to-test-detection-systems-of-businesses-heres-what-you-need-to-know

TransUnion (via StateScoop). (2024, June 26). Synthetic identity fraud is the fastest rising type of fraud in 2024. https://statescoop.com/synthetic-identity-fraud-data-breaches-transunion-report-2024/

U.S. Government Accountability Office. (2024, September 10). Social Security Administration: Actions needed to help ensure success of electronic verification service (GAO-24-106770). https://www.gao.gov/assets/gao-24-106770.pdf

World Economic Forum. (2025, July 7). Detecting dangerous AI is essential in the deepfake era. https://www.weforum.org/stories/2025/07/why-detecting-dangerous-ai-is-key-to-keeping-trust-alive/