Analysis

A collection of 47 posts

Suno AI: Music for Everyone

Discover how Suno.ai allows everyone to make and sell professional music. Legally, though, there are open questions.

· 4Fsh Team

Anthropic Introduces Claude AI Models to U.S. Government Amid Privacy Concerns

Learn how Anthropic's Claude AI models are being integrated into U.S. government operations amid privacy concerns.

· 4Fsh Team

Understanding AI Jailbreaks

Explore AI jailbreaks, their risks, and effective strategies to mitigate them in this in-depth analysis.

· Sagger Khraishi

Musicians Composing with AI Is Becoming a Thing

Learn how artists are using AI-generated music in their work.

· 4Fsh Team

How Adobe's Firefly Uses AI-Generated Images From Competitors

Explore how Adobe's Firefly AI uses rivals' AI-generated images for training, and the ethical implications of doing so.

· 4Fsh Team

How LLMs Downplay Intelligence to Match Roles

A summary of research on how AI can adjust its apparent intelligence to fit its role.

· 4Fsh Team

AI for Social Good: Addressing Global Challenges

Explore how AI prompts address global issues. Discover innovative applications of AI technology for social impact.

· 4Fsh Team

Smart Factories of Tomorrow: How AI is Reshaping Manufacturing

Discover how AI is driving the evolution of smart factories, enhancing efficiency, and revolutionizing manufacturing processes.

· 4Fsh Team

AI in Job Hunting: 5 Trends Reshaping Recruitment in 2024

Stay informed about 5 key AI trends transforming the recruitment landscape in 2024. Prepare for the future of job hunting.

· 4Fsh Team

Leveraging AI for Personalized Recommendations: Tips and Strategies

Discover how to use AI for personalized recommendations. Boost customer satisfaction and sales with these proven strategies.

· 4Fsh Team

Grimes and AI-Generated Music

Did you know about GrimesAI, Grimes's music project? Learn about its mechanics, guidelines, and revenue sharing.

· 4Fsh Team

Climate Modeling & AI in Environmental Research

AI is upgrading climate modeling by improving accuracy, predicting extreme weather, and guiding environmental action. Updated for 2024.

· 4Fsh Team

Voice-Based AI Prompting: The Future of Interaction

Discover how voice-based AI prompts are reshaping user interfaces. Learn implementation strategies for seamless interactions.

· 4Fsh Team

AI in Mental Health: 6 Benefits and 5 Boundaries to Know

Explore the impact of AI on mental health with 6 key benefits and 5 important boundaries to consider for 2024.

· 4Fsh Team

Using AI to Find Your Unique Value Proposition

Explore how ChatGPT can change your startup's UVP discovery process, from analyzing customer and competitor data to simulating conversations.

· 4Fsh Team

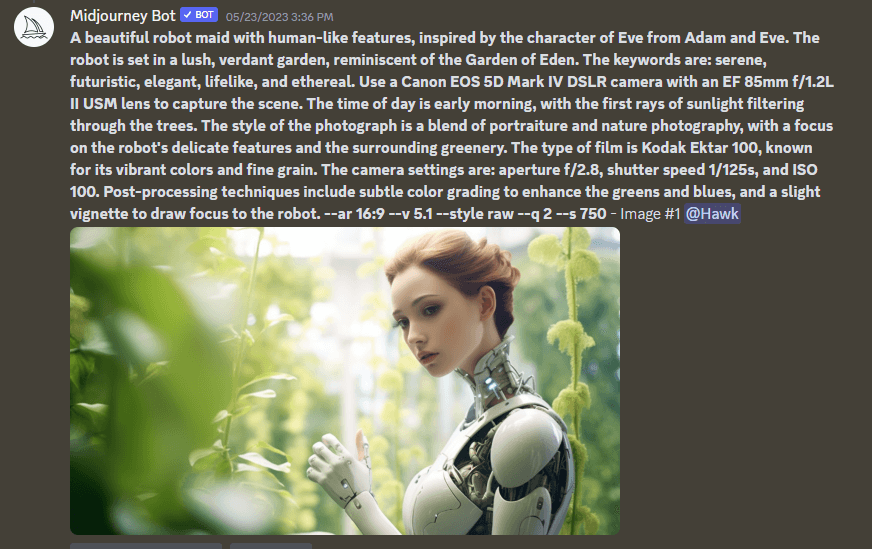

"Do not write yet. Confirm you understand?": Guiding AI with Intent

Explore how to use the phrase "Do not write yet. Confirm you understand?" in Chain of Thought (CoT) prompts for improved AI interactions.

· 4Fsh Team

The Fascinating Evolution of AI Prompting

Dive into the world of AI prompting, how it has evolved, and how it is shaping our interactions with artificial intelligence.

· Sagger Khraishi