Most small companies do not get hacked at a keyboard. They get social-engineered inside their own trust rituals. Meetings. Calendars. Vendor emails. “Quick calls.” The same lightweight signals that keep a scrappy operation moving are the things a modern scam now imitates. AI did not invent the con. It industrialized it.

Consider what actually changed. Generative models give criminals on-demand copy, voice, and faces. The barrier to entry collapsed. The World Economic Forum put it plainly this year: “It’s freely available to someone with very little technical skill to copy a voice, image or even a video” (World Economic Forum, 2025). That is the core shock to your trust stack. You are no longer evaluating the sender. You are evaluating how well a model performed.

Deepfakes are the blunt edge of this shift. In early 2024, Hong Kong police detailed a case where a finance employee joined a video meeting and saw familiar executives on screen. They were all synthetic. The worker wired roughly $25 million. Later reporting tied the case to the engineering firm Arup (The Guardian, 2024; Hong Kong Free Press, 2024). If you lead a startup or an SMB, translate that scenario to your world. A vendor “CEO” jumps into a deal-closing call. A partner “CFO” asks for a revised banking slip. The video looks right because it is cut from your own public footage.

The technique is migrating upward and downward. Upward, it targets executive identity. WPP’s chief executive described an unsuccessful attempt that used a WhatsApp profile, a Teams meeting, YouTube footage, and a voice clone. “We all need to be vigilant to the techniques that go beyond emails to take advantage of virtual meetings, AI and deepfakes,” he wrote internally (The Guardian, 2024). Ferrari executives reported a similar close call: an alleged deepfake of the CEO on a call, where the ruse collapsed when a small verification question broke the script (MIT Sloan Management Review, 2025). Downward, the same toolkit appears in romance-style “catfishing,” fake recruiters, and creator collabs where AI-animated faces carry the conversation long enough to reach “send.” Wired’s mid-2025 reporting is blunt: “Deepfake scams are distorting reality itself” (Wired, 2025).

Email never left the stage. It evolved. Verizon’s Data Breach Investigations Report cautioned that while their 2024 incident set did not yet show a wave of generative-AI-driven compromises, “clear advancements on deepfake-like technology could enhance the quality and effectiveness of various socially engineered attacks… This includes phishing” (Verizon, 2024). Read that as a runway, not a lull. Phishing kits now bundle model prompts, localization, and lure variants. A well-timed prompt produces instant, fluent copy in your market’s dialect. The FBI’s 2024 Internet Crime numbers confirm the macro-trend: reported U.S. cybercrime losses hit $16.6 billion, up 33% year over year (FBI, 2025). That uplift reflects the efficiency of crimeware, not a sudden rise in gullibility.

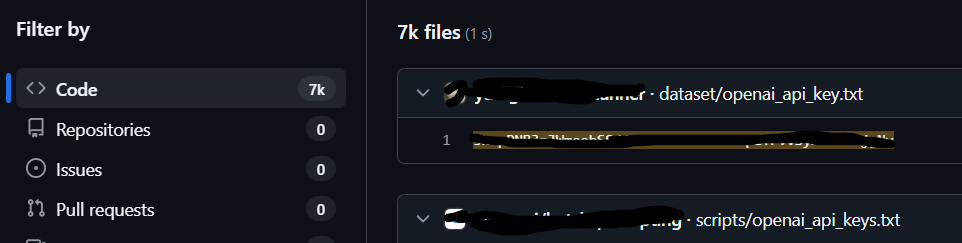

Criminals also sell “AI services” into the hype stream. In 2023, Guardio Labs and other researchers documented fake ChatGPT-branded Chrome extensions that did exactly what hype-drunk users expected, right up until they quietly stole Facebook session cookies and planted super-admin backdoors. One line from the research reads like a training manual for counterfeit AI: “From zero to ‘hero’ in probably less than 2 minutes” (Guardio Labs, 2023; The Hacker News, 2023). A year later, ESET warned about spoofed tools posing as ChatGPT or Midjourney and delivering malware under that brand halo (ESET, 2024). This is not about a single extension. It is about an underground that now packages “fake AI” as distribution. When your team installs “one clever plugin” to move faster, your ad account, business manager, or browser cookies may be the real product.

Fake AI does not stop at extensions. Fraud operations abuse API wrappers, Telegram bots, or knock-off dashboards that look like developer sandboxes and “helper agents.” The point is not to outperform legitimate models. It is to harvest tokens, steal login flows, and claim persistence on your machines. New trust signals appear (e.g., “powered by X,” “Claude inside,” “works with GPTs”) and scammers imitate them. Sift’s Q2 2025 Digital Trust Index describes the pattern succinctly: “Scammers are leveraging GenAI for everything from deepfakes and voice cloning to personalized phishing and fake AI platforms that steal login credentials” (Sift, 2025).

Voice spoofs matter because they plug into your fastest decisions, like approvals and “quick favors.” The FBI warned in May 2025 that if you receive a call or voicemail, even one that sounds like a known person, “do not assume it is authentic” (FBI IC3, 2025). That sentence belongs on every leader’s wall. The voice channel is now just another rendering surface. Your job is to de-romanticize it.

Video-conference vishing took a turn in 2024–2025 as well. Microsoft profiled a group it tracks as Storm-1811 that combined Microsoft Teams lures, voice impersonation, and “Quick Assist” takeover into credential theft and ransomware staging. They also observed adversary-in-the-middle kits such as EvilProxy and NakedPages enabling MFA bypass for familiar brands (Microsoft, 2024). In plain language, the attacker pretends to be IT, asks you to share your screen or approve a push, and then automates the rest. You remember the pitch. You do not remember the moment your second factor was proxied.

A different class of threat sits one layer down, inside the “AI agent” story. When your organization connects a model to documents, calendars, or third-party tools, untrusted content can instruct that model to leak or act. This is called prompt injection, and it is now a formal category in the OWASP Top 10 for LLM applications, LLM01 “Prompt Injection”, alongside “Insecure Output Handling” and “Sensitive Information Disclosure” (OWASP, 2025). This is not academic friction. It is the seam that lets a vendor PDF or web result push instructions into your assistant. Research teams have shown that models can be induced to extract hidden prompts or exfiltrate chat details through camouflaged instructions. Wired reported on “Imprompter,” an attack that uses what looks like random characters to make an LLM identify and send personal details out of band, with success rates near 80% in some tested systems (Wired, 2024). Other peer-reviewed work documents prompt-extraction attacks against system prompts used by real services (Zhang et al., 2024; Wang et al., 2024). If your knowledge base sits behind an “ask the AI” interface, you are hosting a conversational perimeter. It behaves like a perimeter. Treat it that way in your mental model, even if this piece will not hand you a playbook.

The takeaway is not that technology is out of control. It is that your company’s everyday trust stack (the calendar invites, SaaS connectors, APIs, voices, and short messages) has become the battlefield. The trust problem is horizontal. It touches every sector that runs on speed. Creative studios with ad accounts. Clinics with patient portals. Contractors with wire instructions. Nonprofits with donor CRMs. The same patterns appear because the same models feed the lures.

One more uncomfortable point. Much of the “education” your team absorbs arrives via social feeds optimized for outrage. It does not teach the gradient between harmless hype and a one-click compromise. It does not teach how easy it is to lift your voice from a podcast clip and synthesize a “quick” Friday voicemail. It does not show how often “AI-assisted” is just another way to say “faster, cheaper, more iterative.” But the crime economy learned that lesson first.

What does awareness actually look like for a small company today, minus the playbook? It looks like knowing that an avatar on a video call is not a source of truth. It looks like noticing when an “AI” service asks for broad platform permissions that are not obviously needed. It looks like understanding that “agentic assistants” are just software with new failure modes, documented in real security frameworks. It looks like recognizing that, for an attacker, AI’s biggest gift is not infinite intelligence. It is infinite attempts.

The future of the trust stack will be cultural. Vendors will ship better verification cues. Platforms will police their extension stores more tightly. Model providers will keep disrupting abuse networks, as documented in their threat-intel reports, and release new filters (OpenAI, 2025). But none of that rewinds the core dynamic. Synthetics are here. The rituals that kept small teams fast are now the rituals that criminals rehearse. Name that reality out loud. Then design your company’s tempo around it.

References

ESET. (2024, July 29). Beware of fake AI tools masking a very real malware threat. WeLiveSecurity. https://www.welivesecurity.com/en/cybersecurity/beware-fake-ai-tools-masking-very-real-malware-threat/

Federal Bureau of Investigation. (2025, April 23). FBI releases annual Internet Crime Report. https://www.fbi.gov/news/press-releases/fbi-releases-annual-internet-crime-report

Federal Bureau of Investigation, Internet Crime Complaint Center. (2025, May 15). Public service announcement: AI-enabled voice cloning. https://www.ic3.gov/Media/Y2025/PSA250515

Guardio Labs. (2023, March 8). “FakeGPT”: New variant of fake-ChatGPT Chrome extension stealing Facebook ad accounts. https://guard.io/labs/fakegpt-new-variant-of-fake-chatgpt-chrome-extension-stealing-facebook-ad-accounts-with

Hong Kong Free Press. (2024, February 5). Multinational loses HK$200 million to deepfake video scam. https://hongkongfp.com/2024/02/05/multinational-loses-hk200-million-to-deepfake-video-conference-scam-hong-kong-police-say/

Kaspersky. (2024, November 26). Kaspersky uncovers new JaskaGO version on PyPI [Press release]. https://usa.kaspersky.com/about/press-releases/2024_kaspersky-uncovers-new-jaskago-version-on-pypi

Microsoft Threat Intelligence. (2024, May 20). Storm-1811 targets organizations with voice-cloning and Teams-based vishing. Microsoft Security Blog. https://www.microsoft.com/security/blog/2024/05/20/storm-1811-targets-organizations-with-voice-cloning-and-teams-based-vishing/

MIT Sloan Management Review. (2025, February 19). How Ferrari hit the brakes on a deepfake CEO. https://sloanreview.mit.edu/article/how-ferrari-hit-the-brakes-on-a-deepfake-ceo/

OpenAI. (2025, June 1). Disrupting malicious uses of AI: June 2025 (Threat Intelligence Report). https://cdn.openai.com/threat-intelligence-reports/…/disrupting-malicious-uses-of-ai-june-2025.pdf

OWASP Foundation. (2025). OWASP Top 10 for Large Language Model Applications (v1.1). https://owasp.org/www-project-top-10-for-large-language-model-applications/

Sift. (2025). Q2 2025 Digital Trust Index. https://sift.com/index-reports-ai-fraud-q2-2025/

The Guardian. (2024, May 10). CEO of world’s biggest ad firm targeted by deepfake scam. https://www.theguardian.com/technology/article/2024/may/10/ceo-wpp-deepfake-scam

The Guardian. (2024, May 17). UK engineering firm Arup falls victim to £20m deepfake scam. https://www.theguardian.com/technology/article/2024/may/17/uk-engineering-arup-deepfake-scam-hong-kong-ai-video

Verizon. (2024). 2024 Data Breach Investigations Report. https://www.verizon.com/business/resources/reports/dbir/2024/

Wang, J., Liu, Z., Duan, N., & Chen, W. (2024). Prompt extraction benchmark of LLM-integrated applications. Findings of ACL 2024. https://aclanthology.org/2024.findings-acl.791.pdf

Wired. (2024, November 18). This prompt can make an AI chatbot identify and extract personal details from your chats. https://www.wired.com/story/ai-imprompter-malware-llm/

Wired. (2025, June 4). Deepfake scams are distorting reality itself. https://www.wired.com/story/youre-not-ready-for-ai-powered-scams/

World Economic Forum. (2025, February 11). How deepfakes are making fraud easier and more believable. https://www.weforum.org/agenda/2025/02/deepfakes-fraud-prevention/