The faces on the call look familiar. Titles check out. The cadence of the CFO’s voice is right. The small talk lands. Then the “urgent transfer” request hits the agenda, and by the time anyone has doubts, the money is gone. In early 2024, a Hong Kong finance employee joined a multi-person video conference, saw senior colleagues, and sent HK$200 million to five bank accounts. Police later summarized the moment of realization with a line that belongs in every founder’s mental model: “everyone he saw was fake” (Chen & Magramo, 2024). Arup, the UK engineering firm, later confirmed it was the victim. Its global CIO warned that “the number and sophistication of these attacks has been rising sharply,” deepfakes included (Milmo, 2024).

This is what a live deepfake conferencing attack feels like on the receiving end. It is not phishing as you’ve known it. It is social engineering at cinematic resolution, binding visual presence, authority signals, and choreography into a single credible performance. Unlike pre-rendered fake videos, the scam unfolds in real time, responding to you. Realistic lip-sync, voice cloning, and identity blending create a conference room you can’t rely on.

The Arup case is the archetype for small and midsize businesses because it was at once so ordinary (video call, CFO, familiar faces, wire instructions) and so large. According to the Financial Times, the attackers orchestrated multiple transfers over a week in January, and the firm later acknowledged the loss publicly (Financial Times, 2024). You do not need to be a global enterprise to experience this. The workflow of urgent request, recognizable executives, “confidentiality,” and whole-room consensus maps directly onto how founders and SMB leaders make decisions when speed is a virtue. Deepfake operators know that.

There is a reason the tactic is spreading across channels. Microsoft’s threat team wrote that by late May 2024 it had “observed Storm-1811 using Microsoft Teams…to impersonate IT or help desk personnel,” leading targets to grant remote access and surrender credentials during calls (Microsoft Threat Intelligence, 2024). That’s not video face-swapping per se, but it is the same persuasion physics. A voice, a branded UI, and a meeting context are enough to steer a human into a bad decision. The point for a founder is not which plug-in, model, or technique was used. The point is that your collaboration stack can be turned into a stage.

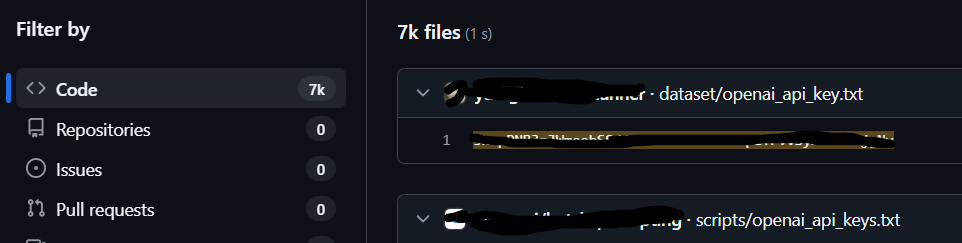

The numbers behind this shift are blunt. Sumsub’s identity-fraud research reported a tenfold increase in detected deepfakes from 2022 to 2023, including a 1,740% surge in North America (Sumsub, 2023). The FBI’s Internet Crime Complaint Center, tracking the broader Business Email Compromise universe, tallied $55.5 billion in global exposed losses across a decade to December 2023, with Hong Kong and the UK frequently serving as intermediary banking hubs (IC3, 2024). By May 2025, the bureau was warning of AI-voiced smishing and vishing impersonating senior US officials, with attackers explicitly “using AI-generated voice messages” to build rapport and pivot targets to malicious links (IC3, 2025). The edges between voice-only vishing, video deepfakes, and conferencing imposture are dissolving.

If you want a human description of how the room feels when reality tilts, read the Arup coverage carefully. The Guardian quotes Arup’s Rob Greig: like many other businesses, the firm faces regular attacks, deepfakes included, and “the number and sophistication of these attacks has been rising sharply in recent months” (Milmo, 2024). The South China Morning Post adds the baseline choreography: a “confidential transaction” message, a video call populated with cloned colleagues, and fifteen transfers to five accounts (Lo, 2024). The live element matters. It removes the time to cross-check. It compresses decision cycles. It pushes the target into the social pressure of a meeting where dissent feels like insubordination.

Academic work explains why this form is so potent. A 2024 systematic review notes that deepfakes span audio, image, and video, and that multi-modal detection remains brittle outside lab conditions (Abbas & Taeihagh, 2024). Another peer-reviewed survey stresses that standards and evaluation metrics lag real-world fakes, creating a “reliability” gap between benchmarks and what circulates on platforms (Altuncu et al., 2024). In plain terms—what fools a person in a fast-moving meeting often also fools the tools. And as WIRED put it in June 2025, “everything from emails to video calls can’t be trusted” at first glance (Roscoe, 2025).

Healthcare sector analysts saw adjacent tradecraft the same spring. HHS’s HC3 warned of social-engineering campaigns targeting help desks, underscoring how support workflows give adversaries pretexts, cover stories, and control channels (HHS HC3, 2024). Microsoft’s write-up of Storm-1811 completes the picture: email bombing to create urgency, impersonated IT on Teams, then Quick Assist to seize the keyboard (Microsoft Threat Intelligence, 2024). Swap the IT costume for a CFO costume and you are back in the Arup script. The costumes change. The plot points—authority, urgency, privacy, continuity of channel—stay fixed.

Here is why this hits founders and SMB executives first. Startups move in chat and meet on video. They run lean finance teams. They concentrate decision rights in a handful of people who are also busy. They also hire remotely, so many teammates have never met in person. Live deepfake conferencing attacks exploit that operating system. The attacker does not need to breach your network. They need a minute of public audio from your exec, a clean LinkedIn org chart, and the ability to assemble a believable ensemble in a familiar room. The more your firm prizes speed, the better “real-time” persuasion works.

The psychological mechanics are not subtle, but they are strong. A live room generates social proof. A face generates recognition. A title lowers friction. A chorus nudges you into “default-comply.” The Arup call weaponized that stack. In the summary from Hong Kong police Senior Superintendent Baron Chan Shun-ching, the presence itself was fake, yet every human signal that tells us “this is safe” was there (Chen & Magramo, 2024). That is why these attacks feel qualitatively different from a suspicious email. You are not scanning a message for typos. You are disagreeing with your boss’s face.

From a sector standpoint, no industry is immune. Finance and crypto remain high-loss targets. Professional services, media, and healthcare feature heavily in identity fraud trendlines (Sumsub, 2023). Public-sector warnings about AI-voiced impersonation show the same pattern migrating into political and civic contexts—another reminder that the underlying capability travels well across domains (IC3, 2025). The connective tissue is interpersonal trust. Wherever trust collapses into video, voice, and a calendar invite, live deepfake conferencing belongs on the risk register.

This is not a story about one spectacular case. It is a story about normal operations under adversarial pressure. Consider one more detail from Microsoft: the June 2024 “update” mattered because the adversary iterated into the meeting layer, moving to Teams messages and calls from impersonated tenants named “Help Desk,” then to remote-access coercion (Microsoft Threat Intelligence, 2024). Map that onto your own situation and the moral is uncomfortable. The attacker will keep moving toward whatever you treat as proof of reality. Today that is a live call.

You will see more of these stories because they work. And because they work, they will get cheaper and more modular. Tooling already compresses the gap between a public speech clip and a plausible voice. Surveys show detection models stumble when tested on fresh, in-the-wild fakes rather than curated academic sets (Altuncu et al., 2024; Abbas & Taeihagh, 2024). Journalism is catching up, but the directional signal is clear. As WIRED summed up, the trust surface of everyday digital life is being distorted at the seams where humans usually feel safest—“from emails to video calls” (Roscoe, 2025).

If you lead a startup or an SMB, commit that Hong Kong quote to muscle memory. In a multi-person video conference, everyone you see might be fake. The room can be an instrument. The instrument can be tuned to your org chart. And the only thing standing between a normal Tuesday and a seven-figure loss is whether the performance convinces someone whose job is to move money.

References

Abbas, F., & Taeihagh, A. (2024). Unmasking deepfakes: A systematic review of deepfake detection and generation techniques using artificial intelligence. Expert Systems with Applications, 252(Part B), 124260. https://www.sciencedirect.com/science/article/pii/S0957417424011266

Altuncu, E., Franqueira, V. N. L., & Li, S. (2024). Deepfake: Definitions, performance metrics and standards, datasets, and a meta-review. Frontiers in Big Data, 7, 1400024. https://www.frontiersin.org/journals/big-data/articles/10.3389/fdata.2024.1400024/full

Chen, H., & Magramo, K. (2024, February 4). Finance worker pays out $25 million after video call with deepfake ‘chief financial officer’. CNN (syndicated by WRAL). https://www.wral.com/story/finance-worker-pays-out-us-25m-after-video-call-with-deepfake-chief-financial-officer/21278816/

Financial Times. (2024, May 17). Arup lost $25mn in Hong Kong deepfake video conference scam. https://www.ft.com/content/b977e8d4-664c-4ae4-8a8e-eb93bdf785ea

HHS Health Sector Cybersecurity Coordination Center. (2024, April 3). Social engineering attacks targeting IT help desks in the health sector [Sector Alert]. https://www.hhs.gov/sites/default/files/help-desk-social-engineering-sector-alert-tlpclear.pdf

Internet Crime Complaint Center (IC3). (2024, September 11). Business Email Compromise: The $55 Billion Scam [Public Service Announcement I-091124]. https://www.ic3.gov/PSA/2024/PSA240911

Internet Crime Complaint Center (IC3). (2025, May 15). Senior US Officials Impersonated in Malicious Messaging Campaign [Public Service Announcement I-051525]. https://www.ic3.gov/PSA/2025/PSA250515

Lo, H.-y. (2024, May 17). UK multinational Arup confirmed as victim of HK$200 million deepfake scam. South China Morning Post. https://www.scmp.com/news/hong-kong/law-and-crime/article/3263151/uk-multinational-arup-confirmed-victim-hk200-million-deepfake-scam-used-digital-version-cfo-dupe

Microsoft Threat Intelligence. (2024, May 15). Threat actors misusing Quick Assist in social engineering attacks leading to ransomware (June 2024 update). Microsoft Security Blog. https://www.microsoft.com/en-us/security/blog/2024/05/15/threat-actors-misusing-quick-assist-in-social-engineering-attacks-leading-to-ransomware/

Milmo, D. (2024, May 17). UK engineering firm Arup falls victim to £20m deepfake scam. The Guardian. https://www.theguardian.com/technology/article/2024/may/17/uk-engineering-arup-deepfake-scam-hong-kong-ai-video

Roscoe, J. (2025, June 4). Deepfake scams are distorting reality itself. WIRED. https://www.wired.com/story/youre-not-ready-for-ai-powered-scams/

Sumsub. (2023, November 28). Global deepfake incidents surge tenfold from 2022 to 2023 [Newsroom]. https://sumsub.com/newsroom/sumsub-research-global-deepfake-incidents-surge-tenfold-from-2022-to-2023/

The Guardian. (2024, February 5). Company worker in Hong Kong pays out £20m in deepfake video call scam. https://www.theguardian.com/world/2024/feb/05/hong-kong-company-deepfake-video-conference-call-scam