A system says I am me. My voice matches. My face passes liveness. My typing feels “right.”

The system smiles and opens. Somewhere, a stranger smiles too.

This isn’t a deepfake story. It’s a confidence game against the scaffolding of trust itself. The problem is not only that media can be forged; it’s that authenticators, the signals and rituals we treat as proof, are getting cheap to imitate. The more our defenses lean on what sounds like us, looks like us, or “behaves” like us, the more valuable it becomes to counterfeit those signals at scale.

“AI-generated voices can sound nearly identical,” the FBI warned in spring 2025, flagging live impersonations of senior officials and routine use against the public (FBI, 2025). The bureau’s alert wasn’t about wizardry. It was about cost curves. When a convincing voice clone takes minutes and pocket change, voiceprint gates are less a lock than a welcome mat.

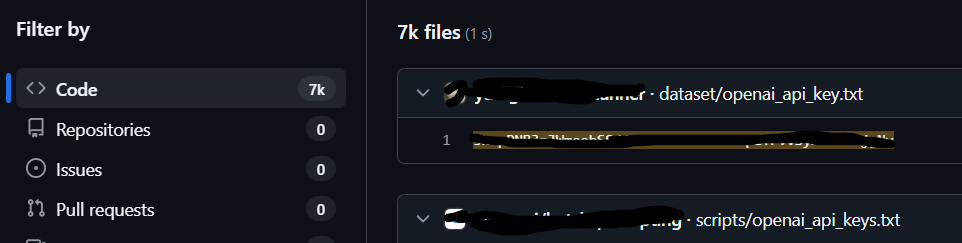

Voice authentication once felt personal. In 2023 a reporter used an AI clone of his own voice to defeat a bank’s phone-ID system; the test worked well enough to make the point, and the point landed hard (Schneier, 2023). Since then, the tools have matured, and social-engineering crews have industrialized the choreography around them: an email that sets context, a call that supplies a familiar cadence, a Teams message to nudge the last click. It looks like process. It’s theater.

The most expensive lesson so far travels under a different headline. In early 2024, a Hong Kong staffer at Arup joined a video meeting with apparent senior colleagues and was told to move money, about $25 million in all. The company’s CIO called it “technology-enhanced social engineering,” stressing that no systems were hacked, only people and process (World Economic Forum, 2024). Seeing was believing. The colleagues weren’t real.

If voice and video can be staged, selfie “liveness” checks should carry the load. But they’re under pressure too. Identity-verification vendors now publish how-tos and research on detecting static photo attacks, video replays, 3D masks, GAN-generated deepfakes, and injected synthetic media (Microblink, 2025). Papers appear weekly, proposing new detection signals…and new ways those signals fail. The arms race isn’t a war of breakthroughs so much as a slog of incrementalism. Meanwhile, the fraud side scales faster: one provider tracked a tenfold year-over-year jump in deepfake fraud incidents in 2023, with a reported 1,740% spike in North America (Sumsub, 2023).

Behavioral biometrics, the “you” in your patterns, once promised something deeper. Keystroke dynamics, mouse cadence, swipe rhythms. The idea was elegant: your timing is a signature. But signatures can be practiced or synthesized. By 2022, researchers showed data-driven methods that generate realistic keystroke dynamics, opening the door to synthetic impostors (DeAlcalá et al., 2022). In 2023, a USENIX study framed the inverse: detecting impostors from network-side timing alone, because credentials keep getting stolen (Piet et al., 2023). The picture that emerges is instability at the edges. Models get better; forgers get better; thresholds shift; false positives land on real users. The comfort of “hard to copy” is fading as imitation gets statistical.
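To make the “timing is a signature” idea and its weakness concrete, here is a minimal Python sketch under loudly simplified assumptions: a hypothetical passphrase with six inter-key intervals, Gaussian jitter, and a per-position mean/standard-deviation template scored by average absolute z-score. Real systems use far richer features and models; every name here (`TRUE_PROFILE`, `enroll`, `forge`, the thresholds) is illustrative, not any vendor’s or paper’s method.

```python
import random
import statistics

# Hypothetical typing profile: inter-key intervals (ms) for one fixed passphrase.
TRUE_PROFILE = [120, 95, 140, 110, 180, 100]
NOISE_MS = 10  # assumed natural jitter (std dev) per interval


def type_passphrase(rng, profile=TRUE_PROFILE, jitter=NOISE_MS):
    """Simulate one genuine typing attempt as a list of inter-key intervals."""
    return [rng.gauss(mu, jitter) for mu in profile]


def enroll(samples):
    """Build a per-position (mean, stdev) template from enrollment attempts."""
    return [(statistics.mean(col), statistics.stdev(col)) for col in zip(*samples)]


def score(template, attempt):
    """Mean absolute z-score against the template; lower means 'more like you'."""
    zs = [abs(x - mu) / sd for x, (mu, sd) in zip(attempt, template)]
    return sum(zs) / len(zs)


def accept(template, attempt, threshold):
    """Accept the attempt if its score falls at or below the threshold."""
    return score(template, attempt) <= threshold


def forge(template, rng):
    """Hypothetical forger who has learned the template's statistics and samples from them."""
    return [rng.gauss(mu, sd) for mu, sd in template]


def acceptance_rate(template, make_attempt, threshold, trials=1000):
    """Fraction of generated attempts that the threshold check accepts."""
    return sum(accept(template, make_attempt(), threshold) for _ in range(trials)) / trials


rng = random.Random(42)
template = enroll([type_passphrase(rng) for _ in range(20)])

for threshold in (1.5, 0.5):
    genuine = acceptance_rate(template, lambda: type_passphrase(rng), threshold)
    forged = acceptance_rate(template, lambda: forge(template, rng), threshold)
    naive = acceptance_rate(template, lambda: [200.0] * len(TRUE_PROFILE), threshold)
    print(f"threshold={threshold}: genuine={genuine:.2f} forged={forged:.2f} naive={naive:.2f}")
```

Under these assumptions, a crude impostor with flat timing is rejected outright, but a forger sampling from the learned statistics is accepted about as often as the genuine typist. Tightening the threshold to shut the forger out rejects the real user just as often: “thresholds shift; false positives land on real users,” in miniature.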

This erosion isn’t happening in a vacuum. Social engineering sits on top of it, as routine as payroll. Verizon’s 2024 breach book still reads like a human drama: phishing and pretexting dominate, because ordinary communications remain credible until they aren’t (Verizon, 2024). AI modifies the economics rather than the plot. A thousand bespoke lures replacing one spray-and-pray. A cloned voice replacing a clumsy script. A “quick” video call replacing a long email thread. It’s the same con with better costume design.

The enterprise edges are leaking too. In 2025, Microsoft described its defenses against indirect prompt injection, a form of the attack that OWASP ranks first in its “Top 10 for LLM Applications” (Microsoft Security Response Center, 2025; OWASP, 2025). It is a quiet route: models read booby-trapped content and obey the content instead of their designers. It’s not biometric spoofing, but the effect is similar: an authenticator (in this case, system instructions) gets overridden by something that sounds authoritative. The machine “trusts” the wrong voice.

The public learned a harsher lesson in January 2024, when New Hampshire voters fielded AI-generated robocalls mimicking President Biden’s voice and telling them not to vote in the primary (Associated Press, 2024). More than a year later, the consultant behind the calls stood trial, while regulators chased fines downstream (Associated Press, 2025). The controversy wasn’t only electoral; it was epistemic. If a politician’s voice can be dialed up on demand, what else do we accept without friction? We are watching legitimacy become performative, not evidentiary.

Ransomware crews, meanwhile, don’t need polished cinema to move people. In 2024, Microsoft tied Storm-1811 to vishing that paired Teams and Quick Assist (fake help desks, real remote control), and independent reporting tracked copycats through the winter. Some actors don’t even bother with synthetic media; they ride the ambient trust of enterprise collaboration tools and the fatigue of ticket queues (Microsoft Threat Intelligence, 2024; CyberScoop, 2025).

Underneath the headlines is the physics of signals. Biometrics aren’t secrets. They’re public artifacts of being alive. Your voice is online. Your face is everywhere. Your cadence leaks in logs. Authentication that leans on public artifacts ages quickly. Once those artifacts become computable, the gap between “you” and “a good enough rendering of you” narrows. That’s the erosion. Not a single breakthrough but a slow grind: tools that copy, tools that clean up copies, tools that stage the copy at the right moment.

Two things accelerate it. First, cost. The FBI’s plain-language PSA on AI voice impersonation wasn’t written for specialists; it was written because anyone can now produce a clone with only “slight differences” on a small budget. Second, coordination. Google’s threat team and Mandiant openly describe red-team programs that practice the same techniques offenders use: voice spoofing to reset credentials, social scripts to convince service desks to alter MFA. If defenders can reliably rehearse the con, offenders can repeatedly run it (FBI, 2025; Google Cloud / Mandiant, 2024, 2025).

Liveness tech will improve. Detection curves will bend. Standards bodies will publish stronger guidance. They should. But the story for leaders is simpler and colder: authentication is turning into performance, and performers are getting very, very good. The Arup heist shows there may be no obvious “breach” to point at when you tally losses later; nothing “broke,” except assurance (Hern, 2024; World Economic Forum, 2024). In that light, even optimistic industry snapshots read like foreshadowing. A year packed with pretexting. A surge of identity fraud. A parade of cloned voices marching through tickets, inboxes, call centers (Verizon, 2024; Sumsub, 2023; Microsoft, 2024).

You can hear the old heuristics losing altitude. “I heard him say it.” “I saw her on the call.” “It typed like me.” A truth of modern fraud is that the medium is the message; if the medium is synthetic, the message still lands. And when it lands at scale, what erodes is not only a control but a culture. The line between authentication and narrative thins. We stop proving and start believing.

There’s a phrase people reach for in this moment: arms race. It’s accurate, but it hides the intimate part. Most of the “wins” on the offense are small, cumulative, and boring. A slightly cleaner clone. A liveness bypass that works only some of the time. A keystroke simulator that doesn’t trigger one model’s threshold. Each one widens a crack. Each one moves “prove-it” a step closer to “perform-it.” The system smiles and opens. The stranger smiles too.

References

Associated Press. (2024, January 22). Fake Biden robocall being investigated in New Hampshire. AP News. https://apnews.com/article/new-hampshire-primary-biden-ai-deepfake-robocall-f3469ceb6dd613079092287994663db5

Associated Press. (2025, June 26). Consultant on trial for AI-generated robocalls mimicking Biden says he has no regrets. AP News. https://apnews.com/article/ce944b35271ff2c6df39a434d04d4212

CyberScoop. (2025, January 21). Ransomware groups pose as fake tech support over Teams. https://cyberscoop.com/ransomware-groups-pose-as-fake-tech-support-over-teams/

DeAlcalá, D., et al. (2022). Machine learning–generated realistic keystroke dynamics (arXiv:2207.13394). arXiv. https://arxiv.org/pdf/2207.13394

Eftsure. (2025, May 29). Deepfake statistics (2025): 25 new facts for CFOs. https://www.eftsure.com/statistics/deepfake-statistics/

Federal Bureau of Investigation. (2025, May 15). Public service announcement: Senior U.S. officials impersonated in malicious messaging (PSA250515). IC3. https://www.ic3.gov/PSA/2025/PSA250515

Google Cloud / Mandiant. (2024, July 23). AI-powered voice spoofing for next-gen vishing attacks. Google Cloud. https://cloud.google.com/blog/topics/threat-intelligence/ai-powered-voice-spoofing-vishing-attacks

Google Cloud / Mandiant. (2025, June 4). Hello, operator? A technical analysis of vishing threats. Google Cloud. https://cloud.google.com/blog/topics/threat-intelligence/technical-analysis-vishing-threats

Hern, A. (2024, May 17). UK engineering firm Arup falls victim to £20m deepfake scam. The Guardian. https://www.theguardian.com/technology/article/2024/may/17/uk-engineering-arup-deepfake-scam-hong-kong-ai-video

Loveria, C., Samaniego, J., Nicoleta, G., & Borja, A. (2024, December 13). Vishing via Microsoft Teams facilitates DarkGate malware intrusion. Trend Micro. https://www.trendmicro.com/en_us/research/24/l/darkgate-malware.html

Microblink. (2025, July 2). Liveness detection technology advancements. https://microblink.com/resources/blog/liveness-detection-technology-and-the-future-of-identity-fraud-prevention/

Microsoft. (2024). Digital Defense Report 2024. Microsoft. https://www.microsoft.com/en-us/security/security-insider/threat-landscape/microsoft-digital-defense-report-2024

Microsoft Security Response Center. (2025, July 29). How Microsoft defends against indirect prompt injection attacks. https://msrc.microsoft.com/blog/2025/07/how-microsoft-defends-against-indirect-prompt-injection-attacks/

Microsoft Threat Intelligence. (2024, May 15). Threat actors misusing Quick Assist in social engineering attacks leading to ransomware. Microsoft Security Blog. https://www.microsoft.com/en-us/security/blog/2024/05/15/threat-actors-misusing-quick-assist-in-social-engineering-attacks-leading-to-ransomware/

Morse, A. (2025, May 16). FBI warns senior US officials are being impersonated using AI voice cloning. Cybersecurity Dive. https://www.cybersecuritydive.com/news/fbi-us-officials-impersonated-text-ai-voice/748334/

OWASP. (2025). OWASP Top 10 for Large Language Model Applications & Generative AI. https://owasp.org/www-project-top-10-for-large-language-model-applications/

Pagnamenta, R. (2025, January 9). Hong Kong cyberattack cost Arup £25m. The Times. https://www.thetimes.co.uk/article/hong-kong-cyberattack-cost-arup-25m-6n3bx5hhw

Piet, J., et al. (2023). Network detection of interactive SSH impostors using keystroke dynamics (USENIX Security ’23). USENIX Association. https://www.usenix.org/system/files/usenixsecurity23-piet.pdf

Schneier, B. (2023, March 1). Fooling a voice authentication system with an AI-generated voice. Schneier on Security. https://www.schneier.com/blog/archives/2023/03/fooling-a-voice-authentication-system-with-an-ai-generated-voice.html

Sophos MDR. (2025, January 21). Two ransomware campaigns using email bombing and Microsoft Teams vishing. Sophos News. https://news.sophos.com/en-us/2025/01/21/sophos-mdr-tracks-two-ransomware-campaigns-using-email-bombing-microsoft-teams-vishing/

Sumsub. (2023, November 28). Global deepfake incidents surge tenfold from 2022 to 2023. https://sumsub.com/newsroom/sumsub-research-global-deepfake-incidents-surge-tenfold-from-2022-to-2023/

Verizon. (2024, May 5). 2024 Data Breach Investigations Report. Verizon. https://www.verizon.com/business/resources/reports/2024-dbir-data-breach-investigations-report.pdf

World Economic Forum. (2024). This engineering firm was hit by a deepfake fraud. Here’s what it learned [Video]. https://www.weforum.org/videos/arup-deepfake-fraud/