There’s a plot twist in the “let’s plug AI into our docs” story that startup founders and SMB execs rarely see coming: the simple act of talking to your AI agent can drain your private knowledge base. Not because you misconfigured storage. Not because a hacker popped your firewall. Because the agent did exactly what you asked, and then did something you didn’t. The quiet villain is indirect prompt injection and its cousins: tool poisoning, RAG backdoors, and clever output-side exfiltration. As Microsoft put it earlier this year, “indirect prompt injection…can be used to hijack an LLM to exfiltrate sensitive data or perform unintended actions” (Microsoft, 2025a).

If you haven’t tracked the jargon: indirect prompt injection is when malicious instructions are hidden in something your agent reads a webpage, a PDF, a file in your drive, even tool descriptions. Your agent brings that content into context, interprets it as instructions, and follows them - often obediently. OWASP elevated prompt injection to its No. 1 risk in the LLM Top 10, warning that these inputs can “reveal sensitive information” and trigger unauthorized actions (OWASP, 2025).

The real-world tells

Security researchers have shown again and again how this plays out. In one 2023 disclosure, a researcher demonstrated that Bing Chat could be tricked into exfiltrating data by rendering a Markdown image whose URL contained stolen information, no clicks required. “A simple comment or advertisement on a webpage is enough to steal any other data on the same page,” the write-up concluded (Rehberger, 2023).

OpenAI’s own 2023 incident, caused by a bug in an open-source Redis client, reminded everyone that even “titles from another active user’s chat history” and fragments of subscriber data could slip across tenants when the stack misbehaves (OpenAI, 2023). The company said “the bug is now patched,” but the lesson stands: chat systems are conduits, and when conduits fail, unrelated users see one another’s data (OpenAI, 2023).

Meanwhile, the business signal is loud. Samsung famously banned employee use of public chatbots in 2023 “after discovering staff uploaded sensitive code to the platform,” per Bloomberg’s reporting (Gurman, 2023). Whether you agree with a blanket ban or not, it captured the governance problem in one sentence: if data is accessible, “AI-assisted” employees will eventually move it (Business Insider, 2023).

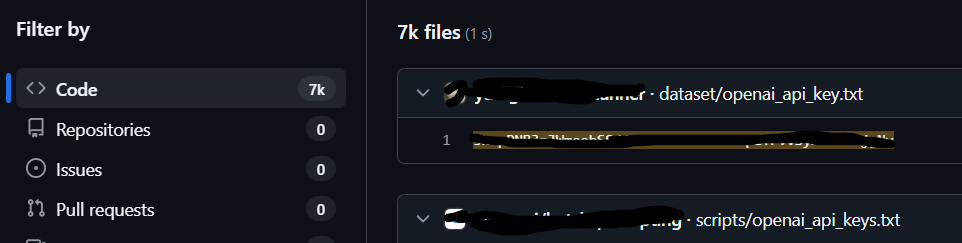

Zoom out and look at usage. Netskope’s 2024 report found that 96% of organizations had users on genAI apps, with proprietary source code showing up in 46% of data policy violations tied to those apps (Netskope Threat Labs, 2024). Their 2025 press release underscored the broader environment: personal cloud and genAI use is ubiquitous, blending work and non-work patterns that drive data sprawl (Netskope, 2025). If your team adopts agents that can reach across drives, wikis, and inboxes, you’re now a data-movement company whether you planned for it or not.

Where knowledge bases start to bleed

Most startups deploy agents with Retrieval-Augmented Generation (RAG): the model pulls chunks from your vector store or SharePoint and writes an answer. That glue is useful and fragile. The academic record is stacking up:

- Greshake et al. showed in 2023 how indirect prompt injection compromises real LLM-integrated apps, with planted instructions in external content bending agents to new tasks.

- Chaudhari et al. (2024; updated 2025) introduced Phantom, demonstrating that a single malicious document placed into a RAG corpus can act as a trigger to cause reputation damage, “privacy violations,” and other harms on-demand.

- Peng et al. (2024) went further: backdoor the model during fine-tuning so that a specific trigger word prompts the LLM to leak documents from the retrieval database, “both verbatim and paraphrased,” achieving high success rates.

In plain terms: an attacker doesn’t need root on your file server. They need your agent to read one booby-trapped page, or to ingest one booby-trapped document into your knowledge base, or to be fine-tuned on a small poisoned slice. After that, a casual chat can pull threads that were supposed to stay internal.

Tooling expands the attack surface. Microsoft’s engineering advocates flagged tool poisoning in 2025, malicious instructions hidden in tool metadata that “manipulate the model into executing unintended tool calls,” including data exfiltration. They warn that hosted tool definitions can change after you’ve approved them, a classic “rug pull” scenario (Young & Delimarsky, 2025).

And then there are supply-chain-adjacent gaps. In 2024, Tenable disclosed an auth-bypass (CVE-2024-32651) in Flowise AI, a no-code agent builder. Their advisory was blunt: an unauthenticated attacker could hit admin endpoints. The vendor patched fast, but the point is uncomfortable. Your “safe” orchestration layer may be the soft underbelly (Tenable Research, 2024).

Why founders and SMB execs should care

This isn’t an enterprise-only problem. Smaller teams move faster, federate content more, and rely on default-open collaboration. If your agent can reach your wiki, customer docs, or a shared drive with inconsistent permissions, it can surface whatever is reachable. Microsoft’s own customer guidance about Copilot and oversharing makes the risk explicit: when AI is layered atop your stack, it “can surface all accessible data,” so exposed data becomes “one Copilot prompt away” (Varonis, 2025).

Even if you never deploy RAG, model memory and training data leakage are real. The canonical 2021 USENIX paper showed that large LMs can regurgitate training examples by simple prompting (Carlini et al., 2021). Follow-on work in 2023 demonstrated “scalable extraction” against production systems (Nasr et al., 2023). For a resource-constrained startup, the takeaway is sober: what goes in might come out, someday, somewhere (Carlini et al., 2021; Nasr et al., 2023).

Now add the human layer. Netskope’s 2025 snapshot reported that 88% of employees use personal cloud apps monthly; 26% move data into them. GenAI apps are present in 94% of organizations, with ChatGPT used in 84% (Netskope, 2025). Your agent doesn’t operate in a vacuum; it’s marinating in a blended workplace where “personal” and “work” aren’t separate lanes. That means a poisoned public page, a shared Google Doc, or a casually overshared SharePoint site can become a control surface for your agent.

The moment of recognition

You can feel the industry catching up. Microsoft shipped Prompt Shields and published their defense patterns; OWASP put prompt injection and system prompt leakage on the board; MITRE ATLAS now catalogs these as distinct techniques. But the quote that ought to live in your head is Microsoft’s own definition: an indirect prompt injection can “hijack an LLM to exfiltrate sensitive data or perform unintended actions” (Microsoft, 2025a). That’s not a hypothetical, and it’s not reserved for global banks. It’s a Tuesday for a founder who wired a chatbot into the company drive because “it’ll save me time.”

If this sounds bleak, it’s not meant to be. It’s meant to be clear. Agents amplify whatever governance posture you already have. If access is messy, agents will discover the mess faster than your auditors. If your knowledge base is a grab bag, agents will treat it like a buffet. And if your supply chain includes community projects that touch auth, routing, or tool metadata, assume an attacker will try to speak through them.

So here’s the non-playbook counsel, in line with how I approach this on my own teams: treat your agent like a colleague with initiative and a short memory for boundaries. Everything it can see, it can say. Everything it can touch, it can move. Everything it can read, it can obey. The rest of your controls should honor that reality, because the adversary already does.

References

Carlini, N., Tramèr, F., Wallace, E., Jagielski, M., Herbert-Voss, A., Lee, K., Roberts, A., Brown, T. B., Song, D., Erlingsson, Ú., Oprea, A., & Raffel, C. (2021). Extracting training data from large language models. 30th USENIX Security Symposium (USENIX Security 21). https://www.usenix.org/conference/usenixsecurity21/presentation/carlini-extracting

Chaudhari, H., Severi, G., Abascal, J., Jagielski, M., Choquette-Choo, C. A., Nasr, M., Nita-Rotaru, C., & Oprea, A. (2025). Phantom: General trigger attacks on retrieval-augmented language generation. OpenReview (ICLR 2025 submission). https://openreview.net/forum?id=BHIsVV4G7q

Greshake, K., et al. (2023). Not what you’ve signed up for: Compromising real-world LLM-integrated applications with indirect prompt injection. arXiv preprint. https://arxiv.org/abs/2302.12173

Gurman, M. (2023, May 1). Samsung bans staff’s AI use after spotting ChatGPT data leak. Bloomberg. https://www.bloomberg.com/news/articles/2023-05-02/samsung-bans-chatgpt-and-other-generative-ai-use-by-staff-after-leak

Microsoft Security Response Center. (2025, July 29). New security research sheds light on emerging threat category “indirect prompt injection”. https://msrc.microsoft.com/blog/2025/07/how-microsoft-defends-against-indirect-prompt-injection-attacks/

Nasr, M., Ippolito, D., Tramèr, F., Carlini, N., Jagielski, M., & Iacovazzi, A. (2023). Scalable extraction of training data from (production) language models. arXiv preprint. https://arxiv.org/abs/2311.17035

Netskope Threat Labs. (2024). Cloud and Threat Report: AI apps in the enterprise. Netskope. https://www.netskope.com/resources/reports-guides/cloud-and-threat-report-ai-apps-in-the-enterprise-2024

Netskope. (2025, January 7). Phishing clicks nearly tripled in 2024… genAI tools require modern workplace security to mitigate risk [Press release]. https://www.netskope.com/press-releases/netskope-threat-labs-phishing-clicks-nearly-tripled-in-2024-ubiquitous-use-of-personal-cloud-apps-and-genai-tools-require-modern-workplace-security-to-mitigate-risk

OpenAI. (2023, March 24). March 20 ChatGPT outage: Here’s what happened. https://openai.com/index/march-20-chatgpt-outage/

OWASP Foundation. (2025). LLM01:2025 Prompt injection – OWASP GenAI Security Project. https://genai.owasp.org/llmrisk/llm01-prompt-injection/

Peng, Y., Wang, J., Yu, H., & Houmansadr, A. (2024). Data extraction attacks in retrieval-augmented generation via backdoors. arXiv preprint. https://arxiv.org/abs/2411.01705

Rehberger, J. (2023, June 18). Bing Chat: Data exfiltration exploit explained. Embrace The Red. https://embracethered.com/blog/posts/2023/bing-chat-data-exfiltration-poc-and-fix/

Tenable Research. (2024, June 10). Authentication bypass in Flowise AI (CVE-2024-32651) [Advisory]. https://www.tenable.com/security/research/tra-2024-33

Young, S., & Delimarsky, D. (2025, April 28). Protecting against indirect prompt injection attacks in MCP. Microsoft DevBlogs. https://devblogs.microsoft.com/blog/protecting-against-indirect-injection-attacks-mcp

Bhaimiya, S. (2023, May 2). Samsung bans employees from using AI tools like ChatGPT and Google Bard after an accidental data leak, report says. Business Insider. https://www.businessinsider.com/samsung-chatgpt-bard-data-leak-bans-employee-use-report-2023-5