Security

A collection of 22 posts

RAG Poisoning and the Drift You Don’t See

Change the retrieval, change the answer. RAG turns inputs into control channels. Plant a sentence and the assistant will repeat it with confidence.

· 4Fsh Team

How to Prompt Inject through Images

Why prompt injecting through images works as a jailbreak, even for GPT-5, and how you can test it on your own GPTs.

· Sagger Khraishi

New Jailbreak for GPT-5

It shouldn't be this easy to jailbreak GPT-5, but here we are with a new injection technique.

· 4Fsh Team

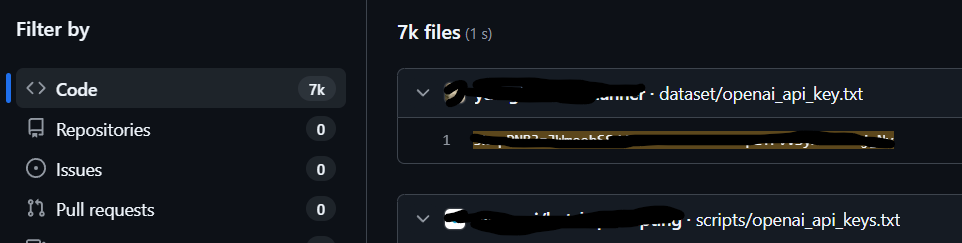

How NOT to find your AI API keys

Where you shouldn't be finding your private API keys: the public internet.

· Sagger Khraishi

AI generated Review Floods

Review floods are a reputational DoS. How AI-made fakes, extortion, and pile-ons drown trust, and what founders can do to stay resilient.

· Sagger Khraishi

High-Confidence Wrong: AI and Insecure Code

Studies show ~45% of AI-generated code carries OWASP-class flaws. The threat isn’t exotic: it’s ordinary defaults shipped at machine speed.

· Sagger Khraishi

That Trust Stack is Under Attack

AI has scaled social engineering. Deepfakes, spoofs, and fake AI tools exploit meetings and calendars. Treat every channel as an attack surface.

· Sagger Khraishi

When Your AI Agent Leaks Your Knowledge Base

Indirect prompt injection can hijack agents and exfiltrate your docs. See how RAG, tools, and supply chains open leaks, and what to do next.

· Sagger Khraishi

Fake Chatbots, Extensions, and Apps

How fake ChatGPT apps and extensions exploit speed, trust, and convenience to hijack business accounts and bleed ad spend.

· Sagger Khraishi

The Trojan Stack: Fake AI APIs and SDKs

Malicious AI-branded SDKs, fake APIs, and extensions are the new supply-chain threat. How they steal tokens and hurt startups, and what to watch for.

· Sagger Khraishi

Industrialized AI Intimacy Scams

Inside shift-based, AI-oiled chat factories that scale intimacy across apps and convert attention into deposits.

· Sagger Khraishi

When a Meeting Isn’t a Meeting

Live deepfake calls blend faces, voices, and authority to move money. Arup's loss shows founders must treat meetings as attack surfaces.

· Sagger Khraishi

The Clone On The Line

AI-cloned voices turn routine calls into urgent traps. How vishing exploits trust, scales with kits, and targets SMBs.

· Sagger Khraishi

AI Catfishing at Scale: How SMBs Get Hooked

Catfishing has gone corporate. IMFI uses LLMs, scripts, and handoffs to mirror founders and funnel affection into theft.

· Sagger Khraishi

When “Prove-It” Stops Proving Anything

AI makes voices, faces, and behavioral biometrics easy to counterfeit. Identity checks become theater. Assurance erodes.

· Sagger Khraishi

AI Phishing Kits Sound Like Your Boss

AI phishing kits mimic executives, sync email, chat, and SMS, and regenerate when blocked. The scam didn't change; the scale and polish did.

· Sagger Khraishi

Deepfake-Driven Fraud: The Breach Is Belief

Arup lost $25M to a deepfake meeting. The FBI warns that AI voice and video scams are surging. The real breach isn’t code; it’s belief.

· Sagger Khraishi

The paper trail you can’t see

SMBs face realistic PDFs and QR-code phishing that bypass filters and MFA. How the invoice con works, and why scanning isn’t safer.

· Sagger Khraishi

Synthetic Identity & The Liveness Illusion

Synthetic identity fraud is exploding as gen-AI overlays bypass liveness; virtual camera injection and face swaps surged in 2024-2025.

· 4Fsh Team

The Skeleton Key: A New AI Jailbreak Threat

Discover how the Skeleton Key AI jailbreak poses new cybersecurity challenges, and which solutions can mitigate the threat.

· 4Fsh Team

Understanding AI Jailbreaks

Explore AI jailbreaks, their risks, and effective strategies to mitigate them in this in-depth analysis.

· Sagger Khraishi

Ethical Considerations for AI Prompting

Venture into the realm of AI prompting ethics, where fairness, privacy, and transparency shape the future of responsible AI innovation.

· 4Fsh Team